Marc Leavitt is Director, Product Marketing for QLogic’s Storage Solutions Group (SSG). Marc has more than 30 years in high-tech marketing, sales and engineering positions developing successful, customer-facing programs that drive revenues and customer satisfaction.

MARC LEAVITT


For IT managers, increased server performance, higher virtual machine density, advances in network bandwidth, and more demanding business application workloads create a critical I/O performance imbalance between servers, networks, and traditional storage subsystems. Storage I/O is the primary performance bottleneck for most virtualized and data-intensive applications. While processor and memory performance have grown in step with Moore's Law, storage performance has lagged far behind. This performance gap is further widened by the rapid growth in data volumes that most organizations are experiencing today. For example, IDC predicts that the volume of data in the digital universe will grow 44-fold over the next 10 years.

Following industry best practices, storage is consolidated, centralized, and located on storage networks (e.g., Fibre Channel, Fibre Channel over Ethernet [FCoE], and iSCSI) to improve efficiency, support compliance policies, and strengthen data protection. However, networked storage design creates many new points where latency can arise, which increases response times, reduces application access to information, and decreases overall performance. Simply put, any over-subscribed port in a network can become a point of congestion.
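The over-subscription point can be made concrete with a little arithmetic. The Python sketch below divides the aggregate link bandwidth of the hosts sharing a port by that port's bandwidth; the function name and the eight-host, 16 Gb/s figures are hypothetical. A ratio above 1:1 means the port cannot carry all of the hosts' traffic if they peak at the same time.

```python
def oversubscription_ratio(host_link_gbps, shared_port_gbps):
    """Aggregate bandwidth of the hosts behind a port divided by that port's bandwidth."""
    if shared_port_gbps <= 0:
        raise ValueError("shared port bandwidth must be positive")
    return sum(host_link_gbps) / shared_port_gbps


# Hypothetical fan-in: eight hosts with 16 Gb/s links share one 16 Gb/s array port.
ratio = oversubscription_ratio([16.0] * 8, 16.0)
print(f"{ratio:.0f}:1")  # 8:1
```

Fabrics are commonly designed with some over-subscription because hosts rarely peak together; the risk is that growing workloads make simultaneous peaks more frequent.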

As application workloads and virtual machine densities increase, pressure on these potential hotspots grows, and so does the time required to access critical application information. Slower storage response times reduce application performance, leading to lost productivity, more frequent service disruptions, lower customer satisfaction, and, ultimately, a loss of competitive advantage.

Over the past decade, IT organizations and suppliers have employed several approaches to address congested storage networks. These approaches have helped organizations avoid the risks and costly consequences of reduced access to information and the resulting under-performing applications. Apart from periodically refreshing the storage infrastructure, today's solutions to these performance challenges center on flash-based caching. An effective solution must be simple to install and manage and must work in an existing SAN with no topology changes.

Flash Memory for Storage Performance

In the last few years, flash memory has emerged as a valuable tool for increasing storage performance. Flash outperforms rotating magnetic media by orders of magnitude when processing random I/O workloads. Unlike disk drives, flash is a rapidly advancing semiconductor technology, so we can expect its performance and capacity to track a Moore's Law-style curve.

To improve system performance, flash has been packaged primarily as solid-state drives (SSDs), which simplifies and accelerates adoption. Although SSDs were originally designed to be plug-compatible with traditional rotating magnetic disk drives, they are now available in other form factors, most notably PCI Express® cards installed in servers. This has led to the introduction of server-based caching as a storage acceleration option.

Server-based Caching

Adding caches to high-I/O servers places the cache where it is insensitive to congestion in the storage infrastructure. The cache is also in the best position to integrate application understanding to optimize application performance. Server-based caching requires no upgrades to storage arrays and no additional appliances in the data path of critical networks, and it allows storage I/O performance to scale smoothly with increasing application demands. As a side benefit, by servicing a large percentage of the I/O demand of critical servers at the network edge, server-based SSD caching reduces the demand on storage networks and arrays. This demand reduction improves storage performance for other attached servers and can extend the useful life of existing storage infrastructure.
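To illustrate how a server-side cache takes load off the storage network, here is a minimal Python sketch of a read-through LRU cache in front of a LUN. It is a simplified model under stated assumptions, not any vendor's implementation: the LUN is a plain dict, capacity is counted in blocks, and the workload (a 10-block hot set read 100 times) is hypothetical. Only misses reach the array, so repeated reads of a hot working set are served at the edge.

```python
from collections import OrderedDict


class ReadThroughCache:
    """Minimal LRU read cache in front of a slower backing LUN (illustrative only)."""

    def __init__(self, backend, capacity_blocks):
        self.backend = backend            # dict-like: block number -> data
        self.capacity = capacity_blocks
        self.entries = OrderedDict()      # cached blocks, least recently used first
        self.backend_reads = 0            # reads that still cross the storage network
        self.hits = 0                     # reads served from the server-side cache

    def read(self, block):
        if block in self.entries:
            self.entries.move_to_end(block)       # mark as most recently used
            self.hits += 1
            return self.entries[block]
        self.backend_reads += 1                   # miss: fetch from the array
        data = self.backend[block]
        self.entries[block] = data
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)      # evict the least recently used block
        return data


# Hypothetical workload: a hot set of 10 blocks, each read 100 times.
lun = {n: f"block-{n}" for n in range(1000)}
cache = ReadThroughCache(lun, capacity_blocks=64)
for _ in range(100):
    for block in range(10):
        cache.read(block)
print(cache.backend_reads, cache.hits)  # 10 990
```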

On the other hand, there are drawbacks to server-based caching. While the current implementations of server-based SSD caching are very effective at improving the performance of individual servers, providing storage acceleration across a broad range of applications in a storage network is beyond their reach. As currently deployed, server-based SSD caching:

- Does not work for today’s most important clustered architectures and applications.

- Creates silos of captive SSDs, making it much more expensive to achieve a specified performance level with SSD caching.

- Necessitates complex layers of driver and caching software, which increases interoperability risks and consumes server processor and memory resources.

- Poses the threat of data corruption due to loss of cache coherence, which creates an unacceptable risk to data processing integrity.

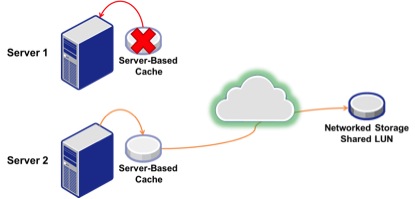

To understand the clustering problem, let’s examine the conditions required for successful application of current server-based caching solutions, as seen in Figure 1.

Figure 1. Server Caching Reads from a Shared LUN

Figure 1 shows server-based caching deployed on two servers that are reading from overlapping regions on a shared LUN. On the initial reads, the data is returned to the requestor and also saved in that server's cache for the LUN. All subsequent reads of the cached regions are serviced from the server-based caches, providing a faster response and reducing the workload on the storage network and arrays.

This scenario works very well provided that read-only access can be guaranteed. However, if either server executes writes to the shared regions of the LUN, cache coherence is lost and the result is nearly certain data corruption, as shown in Figure 2.

Figure 2. Server Write to a Shared LUN Destroys Cache Coherence

In this case, one of the servers (Server 2) has written data back to the shared LUN. However, without a mechanism to coordinate the server-based caches, Server 1 continues to read and process now-invalid data from its own local cache. Furthermore, if Server 1 then writes processed data back to the shared LUN, the data previously written by Server 2 is overwritten with logically corrupt data from Server 1. This corruption occurs even when both servers are members of a cluster because, by design, server-based caching is transparent to host systems and applications, so the cluster software has no way to invalidate or coordinate the caches.
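The failure in Figures 1 and 2 can be reproduced with a toy model. In this Python sketch (the class and variable names are hypothetical), each server has a private write-through cache over a shared LUN modeled as a dict, and the caches never communicate. Read-only sharing stays consistent, but once Server 2 writes, Server 1 keeps serving its cached value, and its read-modify-write then overwrites Server 2's update.

```python
class ServerCache:
    """Private write-through block cache on one server; unaware of other servers' caches."""

    def __init__(self, name, lun):
        self.name = name
        self.lun = lun          # shared LUN, modeled as a dict: block -> value
        self.cache = {}

    def read(self, block):
        if block not in self.cache:             # miss: fetch from the shared LUN
            self.cache[block] = self.lun[block]
        return self.cache[block]                # hit: served locally, possibly stale

    def write(self, block, value):
        self.cache[block] = value               # update this server's cache
        self.lun[block] = value                 # and write through to the LUN immediately


shared_lun = {0: 100}
server1 = ServerCache("server1", shared_lun)
server2 = ServerCache("server2", shared_lun)

# Figure 1: read-only sharing is safe; both caches hold the same value.
assert server1.read(0) == server2.read(0) == 100

# Figure 2: Server 2 writes, and Server 1's cache is never told.
server2.write(0, 150)
stale = server1.read(0)                         # still 100, from Server 1's cache
server1.write(0, stale + 10)                    # read-modify-write on stale data
print(shared_lun[0])  # 110: Server 2's update to 150 has been silently lost
```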

The scenario illustrated in Figure 2 assumes a write-through cache strategy, in which writes to the shared LUN are sent immediately to the storage subsystem. An equally dangerous situation arises with a write-back strategy, in which writes to the shared LUN are held in the cache and written to storage later.

Figure 3 shows the caches on both servers configured for write-back. In this example, cache coherence is lost as soon as Server 1 performs a write operation, because Server 2 has no indication that any data has changed. If Server 2 has not yet cached the region that Server 1 modified and reads it before Server 1 flushes its write cache, Server 2 receives stale data from the shared LUN, and logically corrupt data is again processed.

Figure 3. Cached Server Writes Destroy Cache Coherence
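The write-back case in Figure 3 can be modeled the same way. In this hypothetical Python sketch, writes stay dirty in the writer's cache until `flush()` is called. Server 2 reads the block from the LUN before Server 1 flushes, and it keeps serving that stale copy even after the flush.

```python
class WriteBackCache:
    """Private write-back cache: writes stay dirty locally until flush() is called."""

    def __init__(self, lun):
        self.lun = lun          # shared LUN, modeled as a dict: block -> value
        self.cache = {}
        self.dirty = set()

    def read(self, block):
        if block not in self.cache:
            self.cache[block] = self.lun[block]
        return self.cache[block]

    def write(self, block, value):
        self.cache[block] = value
        self.dirty.add(block)               # the LUN is not updated yet

    def flush(self):
        for block in sorted(self.dirty):
            self.lun[block] = self.cache[block]
        self.dirty.clear()


shared_lun = {7: "v1"}
server1 = WriteBackCache(shared_lun)
server2 = WriteBackCache(shared_lun)

server1.write(7, "v2")          # the newest data exists only in Server 1's cache
seen_by_2 = server2.read(7)     # Server 2 caches the outdated "v1" from the LUN
server1.flush()                 # the LUN now holds "v2"
print(seen_by_2, server2.read(7), shared_lun[7])  # v1 v1 v2
```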

A New Approach

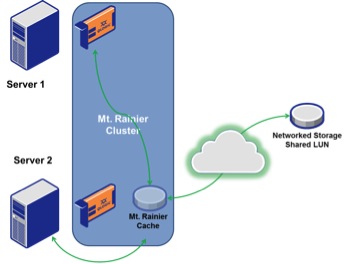

The industry has now produced a new solution that eliminates the threat of data corruption due to loss of cache coherence while enabling efficient, cost-effective scaling and pooling of SSD cache resources among servers.

Clustering

Clustering in the new solution creates a logical group that provides a single point of management and maintains cache coherence with high availability and optimal allocation of cache resources. When clusters are created, one cluster member is assigned as the cluster control primary and another as the cluster control secondary. The cluster control primary is responsible for processing management and configuration requests, while the cluster control secondary provides high availability as a passive backup for the cluster control primary.

Figure 4 shows a four-node cache adapter cluster defined with member #1 as the cluster control primary and cluster member #2 as the cluster control secondary (cluster members #3 and #4 have no cluster control functions).

Figure 4. Cluster Control Primary and Secondary Members

When a primary fails or is otherwise unable to communicate with the other members of a cluster, the secondary is promoted to primary, and it then assigns a new secondary.

When the former primary comes back online and rejoins the cluster, it learns of the new primary and secondary configuration, and rejoins as a regular, non-control member.
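The cluster control roles and failover sequence described above can be sketched as a small state machine. This Python model is hypothetical: the class, its methods, and the rule for choosing a new secondary (the first online member in membership order that is not the primary) are assumptions, since the article does not say how the promoted primary selects the new secondary. It reproduces the Figure 4 layout and the sequence in the text: member #1 fails, #2 is promoted, a new secondary is assigned, and #1 rejoins with no control role.

```python
class CacheCluster:
    """Hypothetical model of cluster control roles: one primary, one passive secondary."""

    def __init__(self, members):
        if len(members) < 2:
            raise ValueError("need at least two members for a primary and a secondary")
        self.members = list(members)            # membership order, e.g. [1, 2, 3, 4]
        self.online = set(self.members)
        self.primary, self.secondary = self.members[0], self.members[1]

    def _pick_secondary(self):
        # Assumption: the first online member that is not the primary becomes secondary.
        for m in self.members:
            if m in self.online and m != self.primary:
                return m
        return None

    def fail(self, member):
        self.online.discard(member)
        if member == self.primary:
            self.primary = self.secondary             # the secondary is promoted to primary
            self.secondary = self._pick_secondary()   # and a new secondary is assigned
        elif member == self.secondary:
            self.secondary = self._pick_secondary()

    def rejoin(self, member):
        # A returning member, including a former primary, takes no control role.
        self.online.add(member)


cluster = CacheCluster([1, 2, 3, 4])
cluster.fail(1)
print(cluster.primary, cluster.secondary)  # 2 3
cluster.rejoin(1)
print(cluster.primary, cluster.secondary)  # 2 3
```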

Independent of cluster control functions, pairs of cluster members can also cooperate to provide synchronous mirroring of cache or SSD data LUNs. In these relationships, only one member of the pair actively serves requests and synchronizes data to the mirror partner (that is, the mirroring relationship is active-passive).
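A synchronous, active-passive mirror pair can be sketched as follows. The Python class below is hypothetical and ignores networking and failure detection; it only shows the ordering that makes the mirror synchronous: each write is applied to the passive partner before it is acknowledged, so a failover loses no acknowledged write.

```python
class MirroredCache:
    """Hypothetical active-passive mirror pair for cached data."""

    def __init__(self):
        self.active = {}     # member that serves all requests
        self.passive = {}    # mirror partner; serves nothing until failover

    def write(self, block, data):
        self.active[block] = data
        self.passive[block] = data   # synchronous: partner updated before the ack
        return "ack"

    def read(self, block):
        return self.active[block]    # only the active member serves requests

    def fail_over(self):
        # The passive copy already holds every acknowledged write, so it becomes active.
        # With no surviving partner, the pair runs unmirrored until a new one is assigned.
        self.active, self.passive = self.passive, {}


pair = MirroredCache()
pair.write(42, b"payload")
pair.fail_over()
print(pair.read(42))  # b'payload'
```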

LUN Cache Ownership

LUN cache ownership guarantees that at any time, only one cache is actively caching any specified LUN. Any member of a cluster that requires access to a LUN that is cached by another cluster member redirects the I/O to the specific LUN’s cache owner, as illustrated in Figure 5.

Figure 5. LUN Cache Ownership Supporting Read Operations

In Figure 5, caches are installed in both servers and are clustered together. Server 2 is then configured as the LUN cache owner for a shared LUN. When Server 1 needs to read or write data on that shared LUN, participation in the storage accelerator cluster tells the cache on Server 1 to redirect I/O to the cache on Server 2. This I/O redirection:

- Guarantees cache coherence

- Is completely transparent to servers and applications

- Works for both read and write caching

- Consumes no host processor or memory resources on either server

- Delivers much lower latency than accessing an HDD LUN
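LUN cache ownership and I/O redirection can be modeled in a few lines. In this hypothetical Python sketch, a shared map records the cache owner of each LUN, and a cache that does not own a LUN forwards the request to the owner instead of caching it. The sketch models only the coherence logic; in the solution described here, redirection is performed by the cache adapters themselves and consumes no host resources, which a Python model cannot show. Write-through to the LUN is assumed for simplicity.

```python
class OwnedCache:
    """Hypothetical per-server cache that caches only the LUNs it owns."""

    def __init__(self, name, owners, storage):
        self.name = name
        self.owners = owners      # cluster-wide map: LUN id -> owning OwnedCache
        self.storage = storage    # backing LUNs: LUN id -> {block: value}
        self.cache = {}           # (lun, block) -> value, populated only for owned LUNs

    def read(self, lun, block):
        owner = self.owners[lun]
        if owner is not self:
            return owner.read(lun, block)       # redirect I/O to the LUN cache owner
        key = (lun, block)
        if key not in self.cache:
            self.cache[key] = self.storage[lun][block]
        return self.cache[key]

    def write(self, lun, block, value):
        owner = self.owners[lun]
        if owner is not self:
            owner.write(lun, block, value)      # redirect I/O to the LUN cache owner
            return
        self.cache[(lun, block)] = value
        self.storage[lun][block] = value        # write-through, assumed for simplicity


storage = {"shared": {0: 100}}
owners = {}
server1 = OwnedCache("server1", owners, storage)
server2 = OwnedCache("server2", owners, storage)
owners["shared"] = server2                      # Server 2 owns the shared LUN's cache

server1.read("shared", 0)                       # redirected: cached on Server 2 only
server1.write("shared", 0, 150)                 # also redirected to Server 2
print(server1.read("shared", 0), len(server1.cache), len(server2.cache))  # 150 0 1
```

Because every request for the LUN lands in one cache, there is only one copy to keep current, which is why no cross-cache invalidation protocol is needed.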

These characteristics are essential to the active-active clustering applications and environments that are most critical to enterprise information processing. Without guaranteed cache coherence, applications such as VMware ESX clusters, Oracle Real Application Clusters (RAC), and those that rely on the Microsoft Windows Failover Clustering feature simply cannot run with server-based SSD caching. Application transparency enables painless adoption and the shortest path to the benefits of cache-accelerated performance.

For active-active applications with “bursty” write profiles, write-back caching offers enormous performance benefits. Encapsulating all SSD processing within the caches leaves host processor and memory resources available to increase high-value application processing density (for example, more transparent page sharing and more virtual machines).
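One reason write-back caching helps bursty writers is that repeated writes to the same blocks can be absorbed in cache and flushed once. The following Python sketch counts array writes for a hypothetical burst of 1,000 writes to 50 hot blocks. It assumes the whole burst fits in the cache before a single flush, and it does not model the lower acknowledgement latency, which is the other benefit of write-back.

```python
def backend_writes(write_stream, write_back):
    """Count block writes that reach the array for one burst (hypothetical model).

    Assumes the cache is large enough to hold every dirty block until a single
    flush at the end of the burst.
    """
    if not write_back:
        return len(write_stream)      # write-through: every write goes to the array
    dirty = set(write_stream)         # rewrites of an already-dirty block stay in cache
    return len(dirty)                 # the flush writes each dirty block once


# Hypothetical burst: 1,000 writes cycling over 50 hot blocks.
burst = [n % 50 for n in range(1000)]
print(backend_writes(burst, write_back=False), backend_writes(burst, write_back=True))  # 1000 50
```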

Because only one cache is ever actively caching a LUN, and all other members of the accelerator cluster process all I/O requests for that LUN through its LUN cache owner, all storage accelerator cluster members work on the same copy of data. Cache coherence is maintained without the complexity and overhead of coordinating multiple copies of the same data. Furthermore, by enforcing a single-copy-of-data policy, the cluster uses SSD cache capacity efficiently, and intra-cluster cache coordination allows more storage data to be cached with fewer SSD resources.
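The capacity argument for a single copy of data can be checked with simple counting. This Python sketch is hypothetical: it compares the SSD blocks needed when each server caches its own working set independently with the blocks needed when each LUN has a single cache owner, for four nodes that share 1,000 hot blocks and each have 250 private ones.

```python
def cached_footprint(working_sets, single_copy):
    """SSD blocks needed to cache every server's working set.

    working_sets: one set of (lun, block) keys per server.
    single_copy=False models independent server caches (each keeps its own copy);
    single_copy=True models one cache owner per LUN, so shared blocks are stored once.
    """
    if single_copy:
        return len(set().union(*working_sets))
    return sum(len(ws) for ws in working_sets)


# Hypothetical: four nodes read the same 1,000 hot blocks of a shared LUN,
# and each also reads 250 blocks of its own private LUN.
shared = {("shared", b) for b in range(1000)}
sets = [shared | {(f"node{n}", b) for b in range(250)} for n in range(4)]
print(cached_footprint(sets, single_copy=False), cached_footprint(sets, single_copy=True))  # 5000 2000
```

The saving grows with the amount of data the cluster members share; with no overlap, the two footprints are equal.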

This cache utilization efficiency greatly improves the economics of server cache deployment and, combined with guaranteed cache coherence, enables adoption of server-based SSD caching as a standard configuration. Because the caches are server-based, with proper configuration they can take advantage of the abundant available bandwidth at the edges of storage networks, allowing them to avoid the primary sources of network latency: congestion and resource contention.

When used in properly designed server caches, flash has the potential to significantly improve storage performance. When caches are coordinated among servers and data corruption problems are solved, server caching becomes a powerful weapon in the battle for higher I/O performance.

Industry Perspectives is a content channel at Data Center Knowledge highlighting thought leadership in the data center arena.