Why Microsoft Has Bet on FPGAs to Infuse Its Cloud With AI

Reconfigurable chips enhance everything from Azure SDN to Bing

April 25, 2018

Like a growing number of Microsoft services, the Bing search engine uses Azure’s FPGA platform. Most recently, Bing has used it to accelerate the generation of “intelligent” answers, which include auto-generated summaries drawn from multiple text sources using machine reading comprehension.

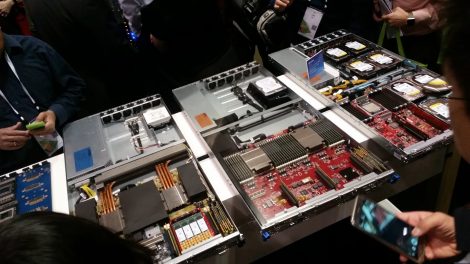

That software runs on an FPGA-powered system codenamed Project Brainwave. The same FPGA platform is used for Azure’s software-defined networking, where it delivers smart NICs. It also powers Cognitive Services, the machine learning APIs Microsoft offers for image, text, and speech recognition. In time, the platform will also be available for customers to run their own workloads.

“We built a very aggressive FPGA implementation, so we could really drive up the performance and the size of models we can deploy and then be able to serve it at low latency but at a great cost structure too,” Microsoft distinguished engineer Doug Burger told Data Center Knowledge in an interview. “When we convinced Microsoft to make this major FPGA investment, it was not because we wanted to do one thing, but because we wanted to have a comprehensive cloud strategy for acceleration across many domains to move us into this new era.”

FPGAs are now in every new Azure server, he said. They enable Microsoft’s “configurable cloud” concept and help the company infuse AI throughout its products and services.

For Bing and Cognitive Services, Brainwave delivers what Burger calls “real-time AI,” running machine learning models that even GPUs couldn’t run at the required speed. It uses pools of FPGAs allocated as a shared hardware microservice that any CPU in the data center can call, rather than limiting each server to the dedicated accelerators plugged into it.
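
To make the pooling idea concrete, here is a toy Python model of accelerators shared by every server rather than bolted to one. The class and method names (`AcceleratorPool`, `acquire`, `release`, `holder`) are hypothetical, and the sketch ignores the network transport, scheduling, and fault handling a real data center service would need; it is not a description of how Azure actually allocates FPGAs.

```python
from collections import deque
from typing import Deque, Dict, List, Optional


class AcceleratorPool:
    """Toy model of FPGAs pooled as a shared service instead of bound to one host."""

    def __init__(self, fpga_ids: List[str]) -> None:
        self._free: Deque[str] = deque(fpga_ids)
        self._leases: Dict[str, str] = {}  # FPGA id -> host currently using it

    def acquire(self, host: str) -> Optional[str]:
        """Lease any free FPGA to the calling host; return None if none are free."""
        if not self._free:
            return None
        fpga = self._free.popleft()
        self._leases[fpga] = host
        return fpga

    def release(self, fpga: str) -> None:
        """Return a leased FPGA to the shared pool."""
        del self._leases[fpga]
        self._free.append(fpga)

    def holder(self, fpga: str) -> Optional[str]:
        """Host currently leasing `fpga`, or None if it is free."""
        return self._leases.get(fpga)


pool = AcceleratorPool(["fpga-0", "fpga-1"])
a = pool.acquire("server-17")
b = pool.acquire("server-42")
print(a, b)                       # fpga-0 fpga-1
print(pool.acquire("server-99"))  # None
pool.release(a)
print(pool.acquire("server-99"))  # fpga-0
```

Two different servers draw from the same pool, and a device freed by one immediately becomes usable by any other; with dedicated, per-server accelerators, server-99 would have had nothing to call.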

“If I take a CPU and I hang an FPGA on it that's isolated over PCIe, and I have a high-bandwidth stream going somewhere in the cloud, just getting it into the server, and processing it, and buffering it, and getting it across PCIe, and bringing it out [takes time]. There are certainly use cases where that's OK if you have a VM and you want to pump data in and get the response back, but from a hyper-scale cloud architecture perspective this is really limited.”

‘Really Crazy Low Latency’

The real-time AI concept is important, he said, because a processing request often gets delayed while the system waits for other requests so it can bundle them together. “I think a big differentiator we have is when we get a request, we throw the whole machine at it; if there's a queue, we just rattle through them, one after another, rather than doing the batching and lumping together to get everyone's done at once.” Burger compares it to being the second person in line at a bank: without batching, you are served right after the first customer, but with batching you have to wait for all 20 people in line to fill out and hand in their forms. With batches, “you have to wait for the last person in the line to finish even though you're second.”
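
A toy latency model makes the bank analogy concrete. It assumes every request needs the same fixed service time and that, in batched mode, no one gets a result until the whole batch finishes; the 1 ms service time is an illustrative value, not a Brainwave measurement.

```python
def sequential_latency(position: int, service_time_ms: float) -> float:
    """Latency seen by the request at 1-based `position` when requests are served one after another."""
    return position * service_time_ms


def batched_latency(batch_size: int, service_time_ms: float) -> float:
    """Latency seen by every request when results are returned only once the whole batch is done.

    Like the bank analogy, this assumes the batch takes as long as serving each
    request in turn; real batching hardware can process a batch faster than that.
    """
    return batch_size * service_time_ms


QUEUE_LENGTH = 20      # people in the bank line (from the analogy)
SERVICE_TIME_MS = 1.0  # illustrative per-request time, not a measured figure

print(sequential_latency(2, SERVICE_TIME_MS))          # 2.0
print(batched_latency(QUEUE_LENGTH, SERVICE_TIME_MS))  # 20.0
```

Under these assumptions, the second request in line waits 2 ms when requests are handled one after another, but 20 ms when it has to wait for the whole batch, which is the gap Burger describes.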

Because the FPGAs are connected directly to the NIC in each server and to the top-of-rack switch, as well as to the server’s CPUs, up to 100,000 FPGAs in one data center can communicate with each other in about ten microseconds (two microseconds per switch hop).
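
Taken together, those two figures imply that a message crosses roughly five switches on its way between any two FPGAs. The back-of-the-envelope check below assumes switch traversal dominates the ten-microsecond total; cable propagation and the FPGAs’ own processing time are ignored.

```python
PER_HOP_US = 2.0      # per-switch-hop latency quoted in the article
END_TO_END_US = 10.0  # approximate FPGA-to-FPGA latency quoted in the article


def hops_within_budget(budget_us: float, per_hop_us: float) -> int:
    """Whole switch hops that fit in a latency budget, ignoring link and endpoint delays."""
    return int(budget_us // per_hop_us)


print(hops_within_budget(END_TO_END_US, PER_HOP_US))  # 5
```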

That means a machine learning model can be scaled across multiple FPGAs. “Not all customers need those super low latencies, but the thing that’s a real differentiator for us is we're offering that really crazy low latency, that single request throughput with no compromises on performance.”

‘Spatial Computing’

Like GPUs, the FPGAs use what Burger calls “spatial computing.” Instead of keeping a small amount of data in a CPU and running a long stream of instructions over it (which he calls the “temporal computing model”), the machine learning model and its parameters are pinned into high-bandwidth on-chip memory, and large amounts of data stream through it.
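
In software terms, the spatial style resembles loading a model’s weights once and then pushing a stream of inputs past them, rather than re-fetching data for every instruction. The Python sketch below is only an analogy: `PinnedMatVec` is a hypothetical name, and a list held in a Python object stands in for the FPGA’s on-chip memory without actually modeling it.

```python
from typing import Iterable, Iterator, List

Vector = List[float]
Matrix = List[List[float]]


class PinnedMatVec:
    """Keeps a weight matrix resident ("pinned") and streams input vectors past it."""

    def __init__(self, weights: Matrix) -> None:
        # Weights are copied in once, at construction, and never reloaded per input.
        self._weights = [list(row) for row in weights]

    def apply(self, x: Vector) -> Vector:
        # One matrix-vector product: each output is the dot product of a weight row with x.
        return [sum(w * xi for w, xi in zip(row, x)) for row in self._weights]

    def stream(self, inputs: Iterable[Vector]) -> Iterator[Vector]:
        # Inputs flow through one at a time while the weights stay where they are.
        for x in inputs:
            yield self.apply(x)


engine = PinnedMatVec([[1.0, 2.0], [3.0, 4.0]])
print(list(engine.stream([[1.0, 0.0], [0.0, 1.0]])))  # [[1.0, 3.0], [2.0, 4.0]]
```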

“GPGPU is the observation that we have this very discrete architecture for graphics, and maybe if we add a few features to it; then there are other problems that map nicely onto that. Now, what we’re trying to do is also broaden the architectures and make them less narrow through programmability.”

FPGAs are more efficient at this than GPUs because they can be programmed with only the instructions needed to run this kind of computation (machine learning-specific processors, like Google’s TPU, are optimized in similar ways), but they won’t be the only way to do this. “There are lots of workloads that map onto this very nicely, and there will be lots of hardware implementations underneath that will drive it,” Burger predicts. “I'm sure there ultimately will be lots of variants of spatial computing that won't be FPGA in the cloud as well, but this is a way to get started and bootstrap all these different functions.”

Spatial computing will be useful for more than networking and machine learning, Burger maintains. “If you think about the cloud and big data, a lot of it is about processing streams and flows of information at very high line rates. That's why you want a spatial architecture, and you want it everywhere, in every server you have, to do this, whether it's intrusion detection or encrypting high-bandwidth streams, or network streams, or storage streams, or applications, or deep learning streams, or database streams -- take your pick.”

Microservices and PaaS architectures like serverless and event-driven computing can also take advantage of spatial computing. “The bandwidths have exploded; the latencies have been plummeting like rocks. People have gone to service-based architectures, and all of those make some sort of centralized spatial architecture really important, because you want low latency, you want that high throughput, you want to be able to service a request without going through some slow software stack like TCP/IP. The workloads and the capabilities and the global architecture are all pushing in a direction where you need both capabilities, both types of architecture.”

Streamlining FPGAs for Cloud Scale

FPGAs are usually viewed as a pricey and complicated option, and just specifying the hardware you need used to take a lot of expertise. Yet the FPGA platform in Azure delivers an order of magnitude more performance than CPUs at less than a 30-percent cost increase, while using no more than 10 percent more power.
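
Those three numbers can be combined into rough efficiency ratios. The arithmetic below treats “an order of magnitude” as exactly 10x and uses the stated cost and power ceilings as worst cases; both are simplifying assumptions rather than figures from Microsoft.

```python
PERF_GAIN = 10.0         # "an order of magnitude", taken as exactly 10x (assumption)
MAX_COST_FACTOR = 1.30   # "less than a 30-percent cost increase" (upper bound)
MAX_POWER_FACTOR = 1.10  # "no more than 10 percent more power" (upper bound)


def min_gain_per_unit(perf_gain: float, resource_factor: float) -> float:
    """Lower bound on the improvement in performance per dollar or per watt."""
    return perf_gain / resource_factor


print(round(min_gain_per_unit(PERF_GAIN, MAX_COST_FACTOR), 1))   # 7.7
print(round(min_gain_per_unit(PERF_GAIN, MAX_POWER_FACTOR), 1))  # 9.1
```

Under those assumptions, performance per dollar improves by at least about 7.7x and performance per watt by at least about 9.1x compared with CPUs alone.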

Microsoft has also managed to reduce the complexity of managing and programming these FPGAs, according to Burger.

“One of the biggest challenges we had initially was operationalizing FPGAs at worldwide scale,” he said. “You want the drivers to be crazy robust, the system architecture should be very stable and very clean to manage.”

Burger’s team writes its FPGA images in Verilog, but the network architecture simplifies deployment. “You’re not wrestling with PCIe. We’ve brought that [complexity] tax way down, and with a lot of the high-level synthesis that we’re doing, the goal is to bring that tax down further. It's slow progress, but we're getting there.”

Rather than presenting developers with custom accelerators to code against, the team creates custom engines in Verilog that have their own programming interfaces. “For Project Brainwave, if you want to run a deep learning model, you're compiling a threaded vector operation with a compiler, and that's fed to our Brainwave engine, and it runs it, and you don't need to know a single thing about Verilog or FPGAs.”
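
The workflow Burger describes, in which a compiler turns a model into coarse-grained operations that an engine executes, can be sketched as a tiny lowering pass. Everything here is hypothetical: the `DenseLayer` type, the `lower` function, and the opcodes `MV_MUL`, `VV_ADD`, and `V_RELU` are invented for illustration and are not Brainwave’s instruction set.

```python
from dataclasses import dataclass
from typing import List, Tuple

Instruction = Tuple[str, str]  # (hypothetical opcode, operand name)


@dataclass
class DenseLayer:
    name: str
    activation: str  # "relu" or "none" in this sketch


def lower(model: List[DenseLayer]) -> List[Instruction]:
    """Translate a stack of dense layers into a flat list of coarse vector/matrix instructions."""
    program: List[Instruction] = []
    for layer in model:
        program.append(("MV_MUL", f"{layer.name}.weights"))  # matrix-vector multiply
        program.append(("VV_ADD", f"{layer.name}.bias"))     # add the bias vector
        if layer.activation != "none":
            # Element-wise activation, e.g. "relu" -> "V_RELU".
            program.append(("V_" + layer.activation.upper(), layer.name))
    return program


program = lower([DenseLayer("fc1", "relu"), DenseLayer("fc2", "none")])
for instruction in program:
    print(instruction)
```

The developer only writes the layer list; the engine receives five coarse instructions, and no Verilog appears anywhere in the flow, which is the kind of abstraction Burger describes.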

Each service that uses the FPGAs gets its own engine, making it look to the software like a custom processor. Send a single instruction to that engine, and it turns into a massive amount of parallel computation. “We hit 40 teraflops with a single request,” Burger says. “We issue one instruction which is a macro instruction to do a lot of matrix vector math, and it runs for ten cycles, and each cycle we're sustaining 130,000 separate operations on the chip. Over a million operations get launched because of that one instruction, and there's a massive fan-out network, so 130,000 mathematical units are lighting up at once.”
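
Burger’s figures multiply out directly: ten cycles at 130,000 operations per cycle is 1.3 million operations from a single instruction. The sketch also shows the size of matrix-vector product that would generate that much work; the 1,000 × 650 matrix and the convention of counting one multiply and one add per weight are assumptions chosen for illustration, not Brainwave’s actual model dimensions.

```python
def ops_per_instruction(cycles: int, ops_per_cycle: int) -> int:
    """Operations launched by one macro-instruction that keeps every math unit busy each cycle."""
    return cycles * ops_per_cycle


def matvec_ops(rows: int, cols: int) -> int:
    """Operations in a dense matrix-vector product, counting one multiply and one add per weight."""
    return 2 * rows * cols


print(ops_per_instruction(10, 130_000))  # 1300000
print(matvec_ops(1000, 650))             # 1300000
```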

That means using FPGAs for workloads will be much simpler, because developers will work at a higher level of abstraction than the Verilog used to program the FPGAs and might not even know their code is running on an FPGA.

Heterogeneous hardware makes sense even in hyper-scale cloud environments that used to get their efficiency by having identical hardware to simplify operations and deployment, because “the scale has become so big that these heterogeneous buckets [of compute] are really large too,” Burger explained. “It’s all about economics: what is the non-recurring engineering cost to build and maintain some new hardware accelerator, at what scale do you deploy it, what's the demand, how valuable is the workload, what’s your capacity for handling [however many] of these different accelerators at a given point in time?”
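
Several of the questions Burger lists amount to a break-even calculation. The toy model below captures non-recurring engineering cost, deployment scale, and workload value, plus ongoing maintenance; the class, field names, and dollar figures are hypothetical, and a real analysis would also account for shifting demand and the cost of managing many accelerator types at once.

```python
from dataclasses import dataclass


@dataclass
class AcceleratorCase:
    nre_cost: float                 # one-time engineering cost to design the accelerator
    yearly_upkeep: float            # ongoing cost to maintain and support it
    yearly_value_per_server: float  # value the workload gains per deployed server per year


def net_value(case: AcceleratorCase, servers: int, years: float) -> float:
    """Total value minus total cost over `years`, with no discounting (toy model)."""
    value = servers * case.yearly_value_per_server * years
    cost = case.nre_cost + case.yearly_upkeep * years
    return value - cost


def break_even_servers(case: AcceleratorCase, years: float) -> float:
    """Deployment size at which value over `years` exactly covers NRE and upkeep."""
    return (case.nre_cost + case.yearly_upkeep * years) / (case.yearly_value_per_server * years)


# Made-up figures for illustration only.
case = AcceleratorCase(nre_cost=10_000_000, yearly_upkeep=2_000_000, yearly_value_per_server=500)
print(round(break_even_servers(case, years=3), 1))  # 10666.7
print(net_value(case, servers=50_000, years=3))     # 59000000
```

With these invented numbers, the accelerator pays for itself over three years only once it runs on roughly 10,700 servers or more, which is why the sheer size of hyper-scale “heterogeneous buckets” matters.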

FPGAs at the Edge?

So, will FPGAs make sense for edge computing, perhaps in some future version of an Azure Stack system to power Azure services on-premises? Again, suggests Burger, “It's just economics; how fat is the plug in the wall, how big is your battery, how big is your model, how much money are you going to pay in silicon? It’s just finding those scenarios and those constraints, and then we know what to do. I think a lot of the learning we've had in the cloud with this rapid innovation cadence will apply to the edge as well.”
