Nvidia's Latest Tesla T4 GPUs Live in Beta on Google Cloud

In addition to now offering Nvidia's latest data center GPU, the cloud company has expanded the locations where GPUs are available.

January 18, 2019

Google Cloud Platform said this week that Nvidia’s newest data center GPU, Tesla T4, is now publicly available in its cloud regions in the US, the Netherlands, Brazil, India, and Japan. This is the first time the cloud provider has offered GPUs in the latter four markets. It's a beta offering, so it might not be quite ready for prime time yet, but it gives users with workloads that might benefit from the technology a chance to try the T4 on for size.

In November, GCP became the first cloud vendor to offer the next-generation T4 silicon as a private alpha, adding the processor to a list of Nvidia GPU offerings already in its portfolio, including the K80, P4, P100, and V100.

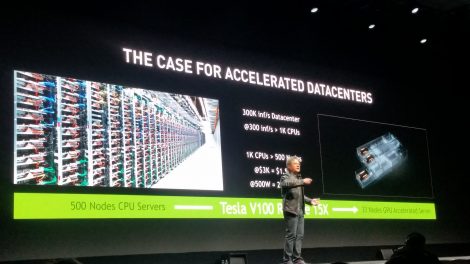

GPUs, once used almost exclusively to process images headed for a monitor, are increasingly being deployed in servers to accelerate workloads. They're the favorite workhorse for training deep learning algorithms, as well as for inference and more traditional data analytics. They're used to offload processing tasks from the CPU, freeing up CPU capacity to do more.

By being the first to market with Nvidia's latest and greatest, GCP likely hopes to get a leg up on Amazon Web Services and Microsoft Azure, its two largest rivals. With AI and machine learning continuing to take a front-and-center position in the IT arena, computing muscle for those workloads has become essential for cloud providers that want to stay ahead of the curve.

"The T4 joins our Nvidia K80, P4, P100, and V100 GPU offerings, providing customers with a wide selection of hardware-accelerated compute options," Chris Kleban, product manager at GCP, said in a statement. "The T4 is the best GPU in our product portfolio for running inference workloads. Its high-performance characteristics for FP16, INT8, and INT4 allow you to run high-scale inference with flexible accuracy/performance tradeoffs that are not available on any other accelerator."

Here's how the T4 compares to other Nvidia GPUs:

Unlike the V100 (hyped by Nvidia as "the most advanced data center GPU ever built to accelerate AI, High-Performance Computing, and graphics"), which was specifically designed for AI, the T4 is based on the architecture used for Nvidia's consumer-focused RTX cards and is something of a general-purpose GPU. However, it's optimized for inference workloads, and Nvidia calls it "the world's most advanced inference accelerator."

It can also be a money saver when combined with the right workload. Utilizing the T4 in Google's cloud is much cheaper than using the more powerful (and more power-hungry) V100, with prices for the former starting at $0.95 hourly compared to $2.48 for the latter. Although the V100 is generally much faster, the T4 can be faster when running inference.
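To make the price comparison concrete, here is a minimal Python sketch of the arithmetic. It uses only the two starting hourly prices quoted above; the function names and the throughput figure in the usage example are hypothetical placeholders, not benchmark results or anything published by Google or Nvidia.

```python
# Starting hourly prices quoted in the article (USD per GPU-hour).
T4_PRICE_PER_HOUR = 0.95
V100_PRICE_PER_HOUR = 2.48


def break_even_speedup(cheap_price: float, expensive_price: float) -> float:
    """How many times faster the pricier GPU must be to match cost per unit of work."""
    if cheap_price <= 0 or expensive_price <= 0:
        raise ValueError("prices must be positive")
    return expensive_price / cheap_price


def cost_per_million(price_per_hour: float, items_per_second: float) -> float:
    """Cost in USD to process one million items at a sustained throughput."""
    if items_per_second <= 0:
        raise ValueError("throughput must be positive")
    seconds_needed = 1_000_000 / items_per_second
    return price_per_hour * seconds_needed / 3600


if __name__ == "__main__":
    ratio = break_even_speedup(T4_PRICE_PER_HOUR, V100_PRICE_PER_HOUR)
    print(f"V100 must be about {ratio:.2f}x faster than a T4 to break even on cost")

    # Hypothetical throughput (items/second), chosen only to illustrate the formula.
    assumed_t4_throughput = 1000.0
    cost = cost_per_million(T4_PRICE_PER_HOUR, assumed_t4_throughput)
    print(f"T4 cost per million items at the assumed rate: ${cost:.4f}")
```

At the quoted prices, a V100 would need to process a given workload roughly 2.6 times faster than a T4 before it became the cheaper option per unit of work. Whether it does depends entirely on the workload, so any real comparison should plug in measured throughput.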

According to Google, the T4's 16GB of memory makes it capable of supporting large machine learning models or of running multiple smaller models simultaneously. Users can create custom VM shapes that best meet their needs with up to four T4 GPUs, 96 vCPUs, 624GB of host memory and optionally up to 3TB of in-server local SSD.

Google, of course, wants to make it easy for new customers to get on board.

"For those looking to get up and running quickly with GPUs and Compute Engine, our Deep Learning VM image comes with Nvidia drivers and various ML libraries pre-installed," Kleban noted.

The company has even posted an online tutorial demonstrating how to deploy a multi-zone, auto-scaling ML inference service on top of Compute Engine VMs and T4 GPUs.
