LinkedIn, a Data Center Innovation Powerhouse, Is Moving to Azure

As the unique system LinkedIn engineers built over the years gets replaced with a public cloud, how much of the innovation will Microsoft retain?

A short, carefully worded statement published Tuesday on LinkedIn’s engineering blog from the social media site’s senior VP of engineering Mohak Shroff revealed his site’s intent to begin transferring its workloads to Azure, its parent company Microsoft’s public cloud, over a multi-year period.

Shroff did not share any details about the upcoming move, but it will apparently bring to an end the company’s venture into designing and operating its own self-healing data center infrastructure — a project which, up until recently, seemed to be going well.

“In recent years we’ve leveraged a number of Azure technologies in ways that have had a notable impact on our business,” Shroff wrote. “That success, coupled with the opportunity to leverage the relationship we’ve built with Microsoft, made Azure the obvious choice. Moving to Azure will give us access to a wide array of hardware and software innovations, and unprecedented global scale.”

Shroff’s announcement would appear to bring a premature close to his company’s efforts to produce and perfect a working model for self-healing infrastructure, a design originally dubbed “Project Inversion” that uses machine learning at a very low level to maintain optimal traffic levels.

“Our multi-year migration will be deliberate,” a LinkedIn spokesperson told Data Center Knowledge. “We’ll begin with a period of infrastructure prototyping, design, build, and testing. This will ensure that we’re both ready for eventual migration with minimal impact on members while building our operational muscle for Azure. . . Our long-term goal is to move all aspects of LinkedIn’s technology infrastructure to Azure. However, [it’s] important to remember this is a multi-year journey, and we will continue to rely on and invest in [the current LinkedIn] data centers as our scale continues to grow throughout this process.”

The Road Almost Traveled

LinkedIn’s existing data center infrastructure is the product of unique and trailblazing engineering, whose details its creators haven’t been shy about sharing publicly.

In an August 2018 presentation for a VMware-sponsored partner event in Las Vegas, LinkedIn’s senior director for global infrastructure, Zaid Ali Kahn, happily told invitees the full story of the system they were building — one he believed would be the model for large, dynamically-scaling communications systems everywhere.

“The coolest thing that we’re doing is building a network, building towards a self-healing infrastructure,” Kahn told invitees. “That means, a network infrastructure that’s designed from the ground up so that it auto-remediates and heals itself. But it’s a journey to get there.”

At the time – about a year ago – Kahn said his team had just reached the point where it was feasible to begin implementing the vision, which was: “Inside the data center we don’t want network engineers to be installing hardware, configuring hardware. We want data center technicians to just simply put a switch in the network, turn it on, and the switch figures out how to connect itself and say, ‘Hey, I’m this kind of switch.’ We want our network engineers to be designing and writing code.”

Zaid Ali Kahn, LinkedIn's senior director for global infrastructure, speaking at VMworld 2018

The principal goal was to “kill the damn core,” he said. The team adopted a flattened, “single-SKU” architecture, meaning that no matter how it’s perceived or what’s perceiving it, the entire structure is treated as a single layer. It was designed from the ground up to be a 100 Gbps network fabric with near-zero latency and bandwidth always available.

Getting to this point required radical disaggregation, specifically weaning the network software from network hardware (disaggregation is a key architectural principle for Azure and all hyperscale infrastructure). Specific switch brands promised disaggregation, but at the cost of becoming beholden to their manufacturers. They also typically use old protocols like SNMP for oversight. LinkedIn’s architects wanted the freedom to use select chipsets for certain routing requirements and retain full insight into, and control over, the control plane.

LinkedIn’s engineers wanted to “modernize our routing protocols so that we can do better forwarding, we can do link selection a lot easier, use routing protocols like ISIS — complete route flooding… And we want to be able to also have topology-aware stacks.”

As part of that effort they made the then-revolutionary decision to implement Apache Kafka — a messaging queue typically used in Big Data deployments — in place of SNMP and traditional Syslog repositories, as their network’s message bus. That bus would move, by Kahn’s estimate, about 1.4 trillion telemetry messages per day.
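To put that daily figure in perspective, a quick back-of-the-envelope conversion gives the average per-second rate it implies. This is only arithmetic on the number quoted above, assuming a uniform load over a 24-hour day; real traffic would presumably peak well above the average.

```python
# Average message rate implied by the quoted daily volume.
MESSAGES_PER_DAY = 1.4e12        # Kahn's estimate, as quoted above
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400 seconds

# Assumes messages are spread evenly across the day.
messages_per_second = MESSAGES_PER_DAY / SECONDS_PER_DAY
print(f"{messages_per_second:,.0f} messages per second on average")
```

That works out to roughly 16 million telemetry messages per second, on average.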

“We take all of the data and stream it somewhere else,” he said. “The beauty of it is we can do whatever we want with the data. We can go into the chipsets, we can pull everything out, and we want to be able to transport all of this… It basically opens your world. Now you’ve got your Kafka agent on the switch, and then you send it to your Kafka broker, and then you have a world of opportunities for developers to write machine learning (ML) techniques and build the most awesome monitoring and management software that we want, tailored to our environment.”
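The agent-to-broker-to-consumer flow Kahn describes can be illustrated with a minimal sketch. This is not LinkedIn's code: an in-memory queue stands in for the Kafka topic, and the function names, switch names, the `rx_drops` counter, and the threshold are all hypothetical, chosen only to show the shape of the pipeline.

```python
import json
import queue
from collections import Counter

# Stand-in for a Kafka topic. A real deployment would use a Kafka
# client library and a broker; this queue only mimics the data flow.
telemetry_topic = queue.Queue()

def switch_agent_publish(switch_id, counters):
    """Hypothetical on-switch agent: serialize counters and publish them."""
    telemetry_topic.put(json.dumps({"switch": switch_id, "counters": counters}))

def consume_and_flag(drop_threshold):
    """Hypothetical consumer: drain the topic and return the switches
    whose accumulated receive drops exceed the threshold."""
    drops = Counter()
    while not telemetry_topic.empty():
        message = json.loads(telemetry_topic.get())
        drops[message["switch"]] += message["counters"].get("rx_drops", 0)
    return sorted(name for name, total in drops.items() if total > drop_threshold)

# Two illustrative switches report counters; one is dropping heavily.
switch_agent_publish("leaf-01", {"rx_drops": 3})
switch_agent_publish("leaf-02", {"rx_drops": 250})
switch_agent_publish("leaf-02", {"rx_drops": 100})

flagged = consume_and_flag(drop_threshold=200)
print(flagged)
```

The point of the pattern is the decoupling: the agent only publishes, so any number of monitoring or ML consumers can be written later without touching the switch.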

Last October, LinkedIn provided further detail on implementing ML at the network level. It introduced developers to Pro-ML, its engineers’ own machine learning stack that used Kafka to stream network data into neural network models. For example, those models could direct a distributed blob storage system called Ambry to provide as-needed storage structures of essentially limitless size to facilitate data streams between network nodes.

Parallel Courses

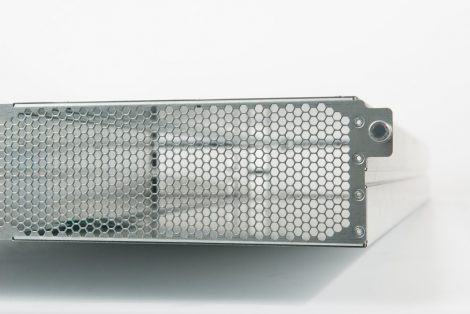

It was all an ambitious effort, the fruits of which LinkedIn gave every indication it was willing to share with the rest of the world. That has, after all, been the company’s pattern. When it pursued its own 19-inch standard for mounting and operating equipment in its own data centers, it published that standard as Open19 and later contributed it to the Open Compute Project, even though OCP’s Open Rack standard (upon which Azure data centers are based) specifies 21 inches. Just this past May, LinkedIn principal engineer Yuval Bachar, who leads the Open19 Foundation, promised his fellow members that 2019 would be the “year of adoption” for the standard in data centers other than LinkedIn’s.

While Azure and LinkedIn design foundations did diverge, there were some parallel paths. For example, when LinkedIn decided it needed its own network operating system, which Kahn described as doing two or three things well instead of nineteen or twenty, it chose SONiC, Microsoft’s own Linux derivative originally built for its own Azure hardware and then released into open source. Earlier this year LinkedIn engineers at the OCP Global Summit 2019 remarked on how SONiC gave them ownership of their network software stack. Among other things, it meant freedom to choose whatever hardware is best suited for each single purpose.

“When we picked SONiC,” LinkedIn software engineer Zhenggen Xu told attendees, “we actually did a very deep analysis about SONiC itself, not just blindly choosing it.” As a containerized OS, it gave operators the freedom to swap out and upgrade kernels without causing detrimental effects to the network, while architects could pick and choose the components that best solved the problems they faced at the time. The network could grow incrementally and under full control, not in fits and starts once every few years.

But perhaps it was just a matter of time before the parent company would decide not to continue building in two directions.

“I suspect the respective management teams saw little value in maintaining parallel infrastructure engineering paths,” Kurt Marko, principal analyst with Marko Insights, wrote in a note to Data Center Knowledge.

“As with all mergers, there are always egos involved, with each side preferring their implementation, more out of familiarity than technical merit,” he continued. “Such emotion aside, I suspect that any unique LinkedIn technology delivering features Azure couldn’t match will be, or has been, incorporated into the relevant services. More broadly, LinkedIn is a software-based service, and its efforts are better focused there, not on infrastructure scaling and management. I suspect its ongoing relationship to the Azure team will be similar to that of other SaaS teams at Microsoft, like the Office 365 and Dynamics 365 business units.”

Now that LinkedIn’s applications and workloads will be moved to Azure, albeit over a period described in years, progress on all these architectural innovations may have come to a standstill. Whether anyone (LinkedIn, Microsoft, OCP, the broader data center community) will ever be able to reap all the fruits of LinkedIn’s long labors appears to be up in the air. If all the work Kahn and his colleagues said they were accomplishing is to be replicated on the Azure network stack, it’s a safe bet that LinkedIn itself may have to be reinvented.
