When Air No Longer Cuts It: Inside Google’s AI-Driven Shift to Liquid Cooling

The company’s latest AI processors drove its data center power density to unprecedented levels.

Joe Kava saw a lot of liquid cooling solutions over the years – servers dipped into baths of mineral oil, HP printer inkjet heads spraying dielectric vapor over CPUs, and everything in between – and he was never a big fan, he said.

Kava has spent the last 10 years or so running all things data center design and operations at Google, where using most liquid cooling solutions on the market would only get in the way. These computing facilities are in a permanent state of change.

“You’ve got row, upon row, upon row of just racks, filled with our servers. And our hardware operations team are in there every day, upgrading, repairing, deploying … constantly,” Kava, Google’s VP of data centers, said in an interview with Data Center Knowledge. “Imagine if you had all of those servers soaking in hundreds of thousands of gallons of mineral oil.” Day-to-day operations would get a lot more complicated.

Kava’s skepticism wasn’t limited to full-immersion solutions. At one point, his team conducted a peer review of sorts for Stanford University, which was preparing to build a liquid-cooled high-performance computing center and needed some outside experts to review the designs submitted to the university by architects and engineering firms. (Supercomputers are usually cooled with liquid coolant brought directly to chip heatsinks via pipes.)

“It was all pretty good stuff, but you know, to me, there’s very few applications” that require processors to be so close to each other that the overall power density is higher than cold air can handle, he said. “We’ve experimented in labs with all kinds of cooling solutions, but for our large-scale deployments [liquid cooling] was never necessary.”

Floor space is generally cheaper than electrical infrastructure, and space is rarely an issue on Google’s massive data center campuses. “I always felt that you could just spread out more, and you won’t necessarily need liquid cooling.”

In Comes AI

Then along came an application that changed the game – in more ways than one.

About three years ago, Google started deploying in-house-designed processors in its data centers to handle its artificial intelligence and machine-learning software. At first, the company used its Tensor Processing Units, or TPUs, to make its own services like Search or AdWords more intelligent, but it later started renting them out to its cloud infrastructure services customers, so they too could supercharge their applications with AI.

Sundar Pichai, CEO of Google’s parent company Alphabet, first mentioned TPUs publicly at I/O, the company’s big annual developer conference in Silicon Valley, in 2016. He announced the next generation, TPU 2.0, at I/O 2017, and at this year’s conference, in May, he unveiled TPU 3.0 – a TPU eight times more powerful than its first version.

TPU 3.0 was so powerful – and power-hungry – that Kava’s team did not see a viable way to cool it with air. “First two generations could get by with just our traditional air cooling, but not the third generation,” he said.

Ever since TPU 3.0 was introduced internally, Google data center engineers have been busy retrofitting infrastructure to accommodate direct-to-chip liquid cooling – and they’ve had to move fast.

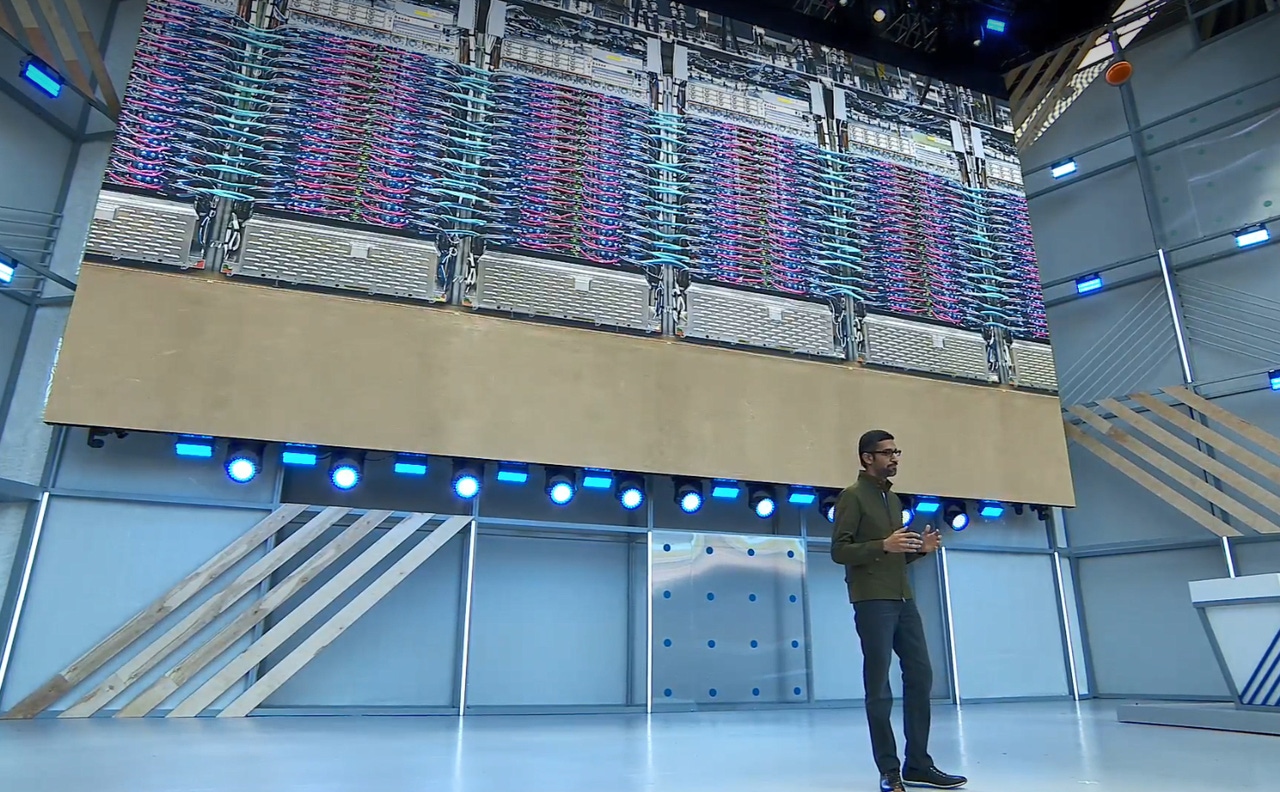

Row of liquid-cooled TPU 3.0 pods inside a Google data center

As it has done with the first two generations of TPUs, Google Cloud is offering TPU 3.0 as a service, and the service has been popular with customers. The rapid ramp-up of cloud machine learning has driven the data center team to expedite conversion of the cooling infrastructure.

Liquid cooling is now being deployed in many Google data center locations. Eventually, Kava expects nearly all the company’s data centers to support TPU 3.0, but “you just can’t do it overnight.”

Design Was ‘Mostly Baked Already’

As these things tend to go at Google, its data center infrastructure R&D team started designing a liquid-cooling solution years ago, before anyone knew it would be necessary – just in case it would be.

They wanted to have a solution in case the Platforms group developed a chip that couldn’t be cooled with air. “They had this mostly baked already,” Kava said about the liquid cooling system.

Every component within Google’s infrastructure, from servers (and now also chips) to data center buildings, has traditionally been designed for maximum integration throughout the stack. Recently, this philosophy was taken a step further. About 18 months ago, the Platforms group, which designs the hardware, merged with Kava’s data center engineering team, forming a single R&D organization. That means computing platform development and data center design are now more tightly coupled than ever at the company that’s built the world’s largest hyperscale cloud.

Two Cooling Loops

The way Google’s current air-based cooling system was designed, combined with the way the liquid-based solution was put together, meant that it was “easy…ish for me to retrofit data centers for that,” Kava said.

The new cooling design is a closed-loop system. It consists of two cooling loops, one circulating dielectric (non-conductive) fluid, the other running regular chilled water.

The first loop brings the dielectric coolant to TPU heatsinks, extracts heat, and carries it to a heat exchanger. That’s where heat is transferred to the chilled-water loop. What happens upstream from that point is the same as in the air-based system: heated water goes back to the facility’s cooling plant and eventually makes its way to the cooling towers outside, which finally expel the heat into the atmosphere.
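As a rough illustration of the heat handoff between the two loops, the steady-state heat balance Q = ṁ · c_p · ΔT gives the coolant flow needed to carry a given heat load. The sketch below is not Google's design: the rack heat load and the temperature rise are hypothetical numbers chosen only for the arithmetic, and the function name is made up for this example. The specific heat of water (about 4,186 J/(kg·K)) is a standard physical value; the same function would apply to the dielectric loop given that fluid's own specific heat, which the article does not state.

```python
# Approximate specific heat of liquid water, in J/(kg*K).
WATER_CP_J_PER_KG_K = 4186.0


def required_mass_flow(heat_w: float, cp_j_per_kg_k: float, delta_t_k: float) -> float:
    """Return the mass flow (kg/s) needed to carry heat_w watts
    when the fluid warms by delta_t_k kelvin (Q = m_dot * cp * dT)."""
    if cp_j_per_kg_k <= 0 or delta_t_k <= 0:
        raise ValueError("specific heat and temperature rise must be positive")
    return heat_w / (cp_j_per_kg_k * delta_t_k)


# Hypothetical inputs for illustration only (not figures from Google).
rack_heat_w = 10_000.0    # heat handed to the chilled-water loop, in watts
water_delta_t_k = 10.0    # temperature rise of the water across the exchanger

# Ignoring losses, the heat exchanger passes the full load to the water loop.
water_flow = required_mass_flow(rack_heat_w, WATER_CP_J_PER_KG_K, water_delta_t_k)
print(f"Chilled-water flow needed: {water_flow:.3f} kg/s")
```

Under these assumed numbers the required flow is a fraction of a kilogram per second per rack, which shows how added chip heat translates into added chilled-water flow at the plant.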

Fan coil units in Google’s current cooling design were relatively close to the racks. They already had chilled water flowing through the copper coils, so it took minimal modifications to the existing pipe network to accommodate another loop of heat exchangers, Kava explained.

Easy Adjustment for Operations

While the data center operations teams had to go through a learning curve as liquid cooling was brought in, it wasn’t a steep one, he said. The adjustment was primarily around additional monitoring, such as new leak-detection sensors installed in the TPU racks.

But again, the cooling fluid that enters the servers is dielectric, so even if there is a leak, it doesn’t pose much risk, Kava said. On the chilled-water side, it’s business as usual.

The data centers that have been equipped with liquid cooling do use marginally more water than before, but the increase is negligible, he said. The cooling plants don’t have to be modified, and it only takes a slight increase of water flow, well within the existing pump headroom.

Optionality

Asked whether he thought liquid cooling would someday be necessary for non-TPU applications, Kava said, “I won’t say never. It’s plausible, but there would have to be a good business driver for it.”

Installing liquid-cooling loops in data center rows does increase cost. “It’s not a ton (of additional cost), but we’re pretty laser-focused on keeping our cost structure as competitive as it possibly can be,” he said. “If I’m going to add any cost at all – (even if) it’s just a few cents per watt – there has to be a good return on that.”

Intel’s server chips, which are still the primary workhorses inside Google’s data centers (and most other data centers), don’t need liquid cooling, even on their current, brawniest generation.

But preparing for things that may come without really knowing for sure that they will is built into Google’s data center engineering DNA.

“We call it optionality,” Kava said. “What is the future going to look like? Well, we don’t know necessarily. We have some directional idea of what the next couple years looks like, but things can change, and sometimes they change pretty quickly, so you try and have optionality that allows more flexibility without spending a ton.”
