
The Evolution of High Availability

The traditional approach to designing and achieving high availability systems has concentrated on hardware and software, writes Dr. Terry Critchley. However, people have come to realize that human error plays a large role as well.

March 17, 2015

This article was updated in January 2022.

Dr. Terry Critchley is a retired IT consultant and author who’s worked for IBM, Oracle and Sun Microsystems.

Surely, I hear you say, availability requirements do not change except for striving to achieve higher availability targets through quality hardware and software. In true British pantomime tradition I would say, "Oh yes they do!" And I'm guessing you would like me to prove it!

The traditional approach to designing and achieving high availability systems has concentrated on hardware and software, although recently people have recognized the importance of 'fat finger' trouble as a cause of outages. This covers people (liveware) errors that cause outages, or incorrect operations that amount to taking the system out, such as entering the wrong time of day or running jobs in the wrong order. The latter type of finger trouble brought down the London Stock Exchange on March 1, 2000. Although the cause was not specified, I suspect a leap year mismatch between two systems: one recognized February 29, the other didn't.
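The leap year suspicion is easy to illustrate. The sketch below compares the full Gregorian rule, under which 2000 is a leap year because it is divisible by 400, with a hypothetical faulty rule that treats every century year as a common year. The function names are invented for the example, and this only shows how such a mismatch can arise; it is not a reconstruction of the Exchange's actual systems.

```python
import calendar


def is_leap_gregorian(year: int) -> bool:
    # Full Gregorian rule: divisible by 4, except century years,
    # except century years divisible by 400 (so 2000 IS a leap year).
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def is_leap_naive(year: int) -> bool:
    # Hypothetical faulty rule: divisible by 4 but never a century year,
    # so it wrongly treats 2000 as a common year.
    return year % 4 == 0 and year % 100 != 0


def days_in_february(year: int, leap_rule) -> int:
    # Number of days a system using `leap_rule` assigns to February.
    return 29 if leap_rule(year) else 28


# Sanity check: Python's calendar module follows the full Gregorian rule.
assert all(is_leap_gregorian(y) == calendar.isleap(y) for y in range(1600, 2401))

for year in (1900, 2000, 2004, 2100):
    a = days_in_february(year, is_leap_gregorian)
    b = days_in_february(year, is_leap_naive)
    status = "MISMATCH" if a != b else "agree"
    print(f"{year}: Gregorian={a} days, naive={b} days ({status})")
```

Of the years checked, only 2000 is flagged: from February 29, 2000 onward, two systems using these rules would disagree about the date by one day.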

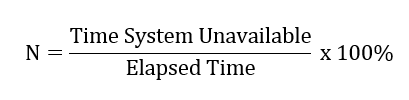

If we can minimize the hardware and software issues and reduce finger trouble through rigorous operations procedures (leading to autonomic computing), then the problem is solved. Or is it? There are other factors which are not recognized, not understood or not even thought of. Availability is usually defined as:

$$A = \frac{\text{MTBF}}{\text{MTBF} + \text{MTTR}}$$

where MTBF is the mean time between failures, MTTR is the mean time to repair, and full availability is represented by 1 (100 percent). Non-availability is then $N = (1 - A) \times 100\%$, which is often expressed as a time (seconds, minutes per year, etc.).
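As a rough illustration, assuming the common definition A = MTBF / (MTBF + MTTR) with both times in hours, the sketch below turns MTBF and MTTR into availability, into non-availability N, and into the equivalent downtime per year. The input figures are made up for the example and a 365-day year is assumed.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumes a 365-day year


def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Availability as a fraction, assuming A = MTBF / (MTBF + MTTR)."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def non_availability_percent(a: float) -> float:
    """N = (1 - A) x 100 percent."""
    return (1.0 - a) * 100.0


def downtime_minutes_per_year(a: float) -> float:
    """Non-availability expressed as a time: minutes of downtime per year."""
    return (1.0 - a) * MINUTES_PER_YEAR


# Illustrative (made-up) figures: a failure every 5,000 hours, 2 hours to repair.
a = availability(mtbf_hours=5000, mttr_hours=2)
print(f"A = {a:.5f}")
print(f"N = {non_availability_percent(a):.3f} percent")
print(f"downtime = {downtime_minutes_per_year(a):.0f} minutes/year")
```

Note that MTTR here covers only the repair of the failed component, which is exactly the assumption questioned next.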

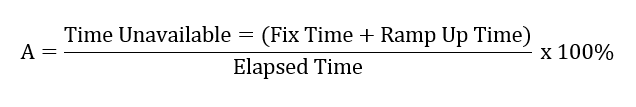

The hidden assumption here, that the service is back as soon as the failed component is repaired, is often a myth: restoring a failing piece of hardware or software does not mean the affected service is available at the same time. Other recoveries are often required, such as rebuilding RAID arrays or recovering a database to some predefined state. I call these additional recoveries ramp-up time; it comes on top of the time taken to fix or recover the failing hardware and/or software, and can be less than, equal to or much greater than the hardware/software fix time.

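In other words, non-availability is really expressed by:

$$N = \frac{\text{MTTR} + T_{\text{ramp}}}{\text{MTBF} + \text{MTTR} + T_{\text{ramp}}} \times 100\%$$

where MTBF is the mean time between failures, MTTR is the mean time to repair the failed component, and $T_{\text{ramp}}$ is the ramp-up time that follows the repair. Expressed as a time, the outage the user sees is the fix time plus the ramp-up time.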

This point is often overlooked (deliberately or unintentionally) when availability percentages and times are stated. A 99.99 percent availability target allows only about 52.6 minutes of downtime a year, so a failure with a two-minute fix time but a ramp-up time of 120 minutes (122 minutes in all) will blow that target out of the window, using up more than two years' worth of the downtime allowance.
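The arithmetic behind the 99.99 percent example can be checked with a short sketch. It assumes a 365-day year and treats the outage seen by the user as fix time plus ramp-up time; the function names are illustrative only.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumes a 365-day year


def annual_downtime_budget(target: float) -> float:
    """Minutes of downtime per year permitted by an availability target (e.g. 0.9999)."""
    return (1.0 - target) * MINUTES_PER_YEAR


def years_of_budget_used(fix_minutes: float, ramp_up_minutes: float, target: float) -> float:
    """How many years' worth of downtime allowance a single outage consumes.

    The outage seen by the user is the fix time PLUS the ramp-up time.
    """
    outage = fix_minutes + ramp_up_minutes
    return outage / annual_downtime_budget(target)


budget = annual_downtime_budget(0.9999)
print(f"99.99% allows {budget:.1f} minutes/year")
print(f"fix only:      {years_of_budget_used(2, 0, 0.9999):.2f} years of budget")
print(f"fix + ramp-up: {years_of_budget_used(2, 120, 0.9999):.2f} years of budget")
```

With these inputs the 2-minute fix alone uses under 4 percent of one year's allowance, while the full 122-minute outage uses roughly 2.3 years of it.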

One example is a U.S. retail company whose system suffered an outage a year or so ago. The problem was fixed in about an hour, but the company estimated that recovering the database to its original state would take several hours. The correct working state of a service is normally specified in a Service Level Agreement (SLA) between IT and the business. The business user is not really interested in whether a duplicate network interface card was used to solve a server problem; he or she is interested in getting the service back as it was before the failure.

Security breaches, in the broadest sense, are the next and possibly newest generator of outages. Most publications on high availability concentrate on the availability of the system, whereas it is the availability of the business service that is key.

Some years ago, Sony estimated that the damage caused by the massive cyberattack against Sony Pictures Entertainment was $35 million. Gasps all round. Today, such attacks hit many organisations, often in the form of ransomware, with eye-watering consequences in money and lost time.

TeamQuest developed a Five-Stage Maturity Model which they have applied to service optimization and capacity planning, along the lines of the older Nolan curve of the 1970s. In this model they specify, among other things, the view taken by an organization in each of the five stages. These are summarized in the table below.

Many availability strategies lie in the Reactive column, dealing with components; some are in the Proactive column where operations and finger trouble are recognized. I believe that organizations ought to migrate to the Service column and then the Value column. The key areas to design, operate and monitor in moving up the stages are:

Hardware and Software - design and monitoring

Operations - operational runbooks and root cause analysis updates

Operating Environment (DCIM) - data center environment

Security - malware vigilance

Disaster Recovery - remote/local choice depending on natural conditions (floods, earthquakes, tsunami, etc.)

There are two viewpoints of interest: the end user's view of the IT service and the IT person's view of the system. A computer system can be working perfectly yet be perceived by the end user as unavailable, because the service he or she uses is not available. How can this be?

The simple reason is that there are two types of outage that can impact a system or a service. One is a failure of hardware or software that makes an application or service unavailable: this is a physical outage. The other is a logical outage, where the physical system is working but the user cannot access the service properly, that is, in the way agreed with IT at the outset. It is the user's view of an outage that matters, not the IT department's.

In one case at an account of mine, the users swore they had been offline for a day, while the IT department swore that everything it controlled was working fine. It turned out that the new LAN loop (trade name Planet) to which the users were attached was down, and IT had no way of observing its status. This illustrates the availability maxim: ‘If you can’t see it, you can’t manage it’.
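The gap between the IT view and the user's view can be sketched as two checks over the same set of components. In this minimal, hypothetical model (component names invented to mirror the anecdote), IT monitors only some of the components a service depends on; the service is available to the user only if every component in its path is up, including those IT cannot see.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    up: bool
    monitored_by_it: bool  # can the IT department observe this component?


def it_view_ok(components: list[Component]) -> bool:
    """IT's view: everything IT can observe is working."""
    return all(c.up for c in components if c.monitored_by_it)


def user_view_ok(components: list[Component]) -> bool:
    """User's view: the service works only if every component in its path works."""
    return all(c.up for c in components)


def unseen_failures(components: list[Component]) -> list[str]:
    """Failed components that IT has no way of observing."""
    return [c.name for c in components if not c.up and not c.monitored_by_it]


# Hypothetical service path mirroring the anecdote: the user LAN loop is down
# but is not instrumented, so IT sees an all-green system.
path = [
    Component("server", up=True, monitored_by_it=True),
    Component("database", up=True, monitored_by_it=True),
    Component("application", up=True, monitored_by_it=True),
    Component("user LAN loop", up=False, monitored_by_it=False),
]

print("IT view OK:  ", it_view_ok(path))
print("User view OK:", user_view_ok(path))
print("Unseen failures:", unseen_failures(path))
```

Here IT's check passes while the user's check fails, which is the logical outage described above. One common way to approximate the user's view in practice is an end-to-end check that exercises the service as a user would, rather than aggregating component health alone.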

Moral

"There are more things in heaven and earth, Horatio, Than are dreamt of in your philosophy," said Shakespeare's Hamlet.

This applies equally to high availability, which today is considered a subset of organisational resilience.

Dr. Terry Critchley is a retired IT consultant and author who has worked for IBM, Oracle and Sun Microsystems, with a short stint at a big UK bank.

Books by Terry Critchley

Open Systems: The Reality

BCS Practitioner Series ISBN 0-13-030735-1
