NVIDIA’s AI Supercomputers Help ‘Augment’ Human Site Inspectors

GE-backed startup Avitas partners with GPU leader to automate industrial inspections

Avitas Systems, a GE Venture startup, has started using the combination of robotics, AI, and advanced data analytics to automate safety inspections in the oil and gas, transportation, and energy industries – and it’s all powered by NVIDIA’s purpose-built AI supercomputers.

NVIDIA’s technology will help Avitas automate inspections in which drones, robotic crawlers, and autonomous underwater vehicles survey sites and capture data and video footage, the companies announced today.

The Boston startup is using NVIDIA’s GPU-powered DGX AI supercomputer systems in several ways: to design 3D models of the “assets,” or equipment, that need inspection; to build flight plans for the drones and guide other robots through the inspections; and to create and run algorithms that analyze the data and find defects, said Alex Tepper, founder and head of corporate and business development at Avitas.

“We are using artificial intelligence to do what we call automated defect recognition, which interprets the sensor information from the robots to detect defects of various kinds,” Tepper said. “Things like corrosion, hot spots, cold spots and micro-fractures.”

After an inspection, the data gathered by a drone or another device is transmitted to a central database, where it’s analyzed alongside past inspection, maintenance, and operational data to determine the severity of the defects found, he explained.

What’s the upshot? The technology results in safer, more precise inspections, including the ability to find defects that are not detectable by the human eye. Historically, companies in the oil, gas, transportation, and energy industries have performed safety inspections manually: walking around facilities with sensors, riding in trucks and looking up at equipment, riding in helicopters and looking down, or even climbing a piece of infrastructure in a harness, Tepper said.

Automation can cut inspection costs by 25 percent. For example, a typical mid-size refinery that used to spend $4 million for an inspection can use Avitas’s services and technology and do it for $3 million, Tepper added.
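The 25 percent figure follows directly from the refinery example Tepper gives. As a quick check, here is a minimal sketch of that arithmetic; the function name `savings_fraction` is purely illustrative and not something Avitas or NVIDIA provides.

```python
def savings_fraction(baseline_cost: float, automated_cost: float) -> float:
    """Return the fraction of the baseline cost saved by automation."""
    if baseline_cost <= 0:
        raise ValueError("baseline_cost must be positive")
    return (baseline_cost - automated_cost) / baseline_cost


# Figures quoted in the article for a typical mid-size refinery.
baseline = 4_000_000   # dollars, manual inspection
automated = 3_000_000  # dollars, using Avitas's services

print(f"{savings_fraction(baseline, automated):.0%}")  # prints 25%
```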

Alan Lepofsky, VP and principal analyst at Constellation Research, said Avitas and NVIDIA’s announcement is an example of the positive things AI can do, as opposed to the dystopian fear that AI will put humans out of work.

“Here’s an example where using AI will make the process safer for humans to fulfill their work,” he said. “The idea [is] that robotics or drones can go to places that humans can’t, and AI can analyze the images that they are sending back, and then engineering decisions can be made. That’s not replacing humans. That’s augmenting humans.”

Avitas, which launched in June, has been working on its technology for the past 12 to 18 months, Tepper said. The company is using two models of the DGX-1 supercomputer – one that can perform at 960 teraflops and another that reaches 170 teraflops, according to an NVIDIA spokesperson. He declined to say how many NVIDIA supercomputers the company has deployed or how many customers Avitas has.

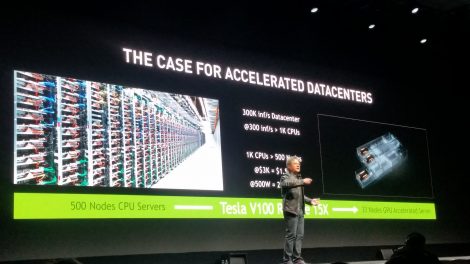

The DGX-1 is housed in a data center and features a complete software stack that includes an integrated operating system, middleware components, and the software tools that allow Avitas’s developers to write their algorithms, said Jim McHugh, general manager of DGX Systems for NVIDIA.

Avitas also uses the DGX Station, a desktop supercomputer that packs the power of half a row of servers into a small form factor, McHugh said. This allows the company to power automated inspections in remote areas where good network connections are hard to get, such as oil rigs in the middle of the ocean or facilities in the desert.

“It’s too expensive to do processing of data and moving it back and forth, so by having the equivalent of half a row of servers out in the field is the advantage of it,” McHugh said.

Avitas’s master algorithms and models run on a DGX-1 supercomputer in a data center. The more data that is collected and fed into the system, the smarter and more accurate the models become at detecting defects, he said. Data from the DGX Stations at remote sites can later be uploaded to the central data center to build better models, he said.
