It was a bright spot in an otherwise dismal market update for data center servers, produced earlier this month by Omdia: While the research arm of Informa Tech downgraded its worldwide server shipment forecast for 2023 a second time this year, to an 11% year-over-year decline, it has seen unprecedented growth in liquid cooling deployments.

"Preliminary data center cooling equipment data points indicate a sharp increase in liquid cooling deployments," reported Omdia's Cloud and Data Center Research Practice Director Vlad Galabov at the time of the report's release, citing figures supplied by thermal management equipment vendors. Some vendors, who had already reported thermal equipment sales increasing as much as 20x (that's not a typo) in the second half of last year, saw continued increases of more than 50% in the first half of 2023.

Omdia's data would appear to contradict last month's annual survey analysis from Uptime Institute. Uptime spoke of declining interest among facilities managers and designers in deploying liquid cooling, at least at significant scale, in the near term.

Yet there's a common thread that explains both observations, according to Galabov and his Omdia colleague, Data Center Compute & Networking Principal Analyst Manoj Sukumaran. In separate notes to Data Center Knowledge, Galabov and Sukumaran told us that data center operators and builders (with the exception of certain hyperscalers, curiously enough) are concentrating their investments on higher-density equipment earmarked for AI workloads. These are precisely the class of server that should be choice candidates for liquid cooling deployments.

Bifurcation

Here's the emerging phenomenon: These higher-density servers are naturally more expensive, which would explain the rise in average unit prices (AUP) for shipped servers. But operators don't need as many of them (of course, since they're high-density units), which is what's driving down shipment numbers. However, with these new units likely to be dedicated to AI workloads, operators' investments in liquid cooling could be confined to these AI subsystems. And that would explain the rising demand for higher-efficiency, air-driven cooling systems.

"The power density of racks doing general purpose computing and storage at the data centers of cloud service providers is 10 - 20 KW," reads the summary of Galabov's report. "By comparison, the power density of racks computing AI model training always exceed 20 KW."

You'd think this is the contingency for which hyperscalers have already planned. As it turns out, Meta, owner and operator of Facebook, operates facilities that were not optimized for liquid cooling, according to Sukumaran. As a result, the best Meta can pull off is air-assisted liquid cooling (AALC). Co-developed with Microsoft, AALC is a concept Meta engineers have been touting since before the COVID-19 pandemic as an innovation capable of stretching the capabilities of air cooling in more conventional facilities.

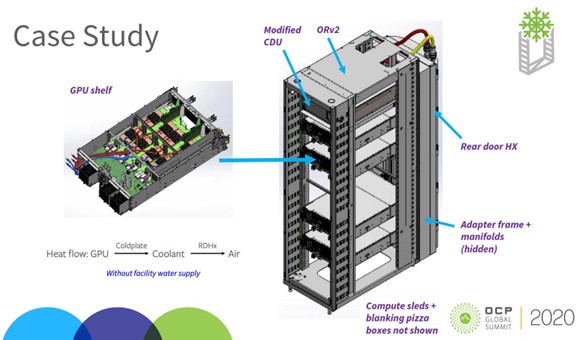

An air-assisted, liquid-cooled Olympus RackServer chassis, first proposed at OCP Virtual Summit 2020.

First demonstrated back in 2020, AALC involves introducing closed-loop liquid cooling into air-cooled racks. Here, the liquid route starts with cold plates attached to the processors (in early tests, to GPUs), proceeds through rack-level manifolds, into a rear-door heat exchanger (HX), and a modified coolant distribution unit (CDU). Heat absorbed by the cold plates is exhausted through the HX, then through the existing outflow air current.

It's a liquid cooling attachment, most certainly, which qualifies as a direct liquid cooling (DLC) component for the purposes of manufacturing. But the end product, after it's installed, is officially an air-cooled rack. That explains why DLC deployments can grow while air-cooled facilities stay pretty much as they are.

While Meta's facilities are equipped for AALC, Sukumaran told Data Center Knowledge, "it is not as efficient as blowing cold air from outside to cool down servers. The extra energy needed for the CDU and the large fans will slightly push up the non-IT power consumption impacting PUE."

When the Numerator and the Denominator Both Go Down

When the power usage effectiveness (PUE) metric was created, it seemed a sensible enough way to gauge how much of a data center's overall power was being consumed by the equipment that supports IT, such as cooling and power distribution, rather than by the IT equipment itself. But as equipment maker Vertiv recently pointed out, a truly innovative cooling solution drives down both IT equipment power consumption and overall power consumption (the denominator and the numerator, respectively, in the PUE ratio). It's therefore entirely possible for the impact of a major innovation in both cooling and power to go undetected by PUE.
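To make that arithmetic concrete, here is a minimal Python sketch. It uses the standard definition of PUE (total facility power divided by IT equipment power). The facility figures are hypothetical, chosen only for illustration, and do not come from Vertiv, Uptime, or Omdia.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_kw <= 0:
        raise ValueError("IT power must be positive")
    return total_facility_kw / it_kw


# Hypothetical facility before a cooling upgrade (illustrative numbers only).
before_it_kw = 1000.0        # power drawn by servers, storage, network
before_overhead_kw = 500.0   # cooling, power distribution, lighting

# Hypothetical facility after the upgrade: IT load and overhead
# both fall by the same proportion (15% in this made-up case).
after_it_kw = 850.0
after_overhead_kw = 425.0

pue_before = pue(before_it_kw + before_overhead_kw, before_it_kw)
pue_after = pue(after_it_kw + after_overhead_kw, after_it_kw)
saved_kw = (before_it_kw + before_overhead_kw) - (after_it_kw + after_overhead_kw)

print(f"PUE before: {pue_before:.2f}")
print(f"PUE after:  {pue_after:.2f}")
print(f"Total power saved: {saved_kw:.0f} kW")
```

In this made-up case the facility draws 225 kW less in total, yet its PUE is 1.5 both before and after. The ratio only moves when the numerator and denominator change by different proportions.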

That would explain a phenomenon both Uptime and Omdia have seen: PUE trends remaining flat since the pandemic. Ironically, when PUE fails to fall, those same major innovations become more difficult to effectively market. (Never mind how often PUE has been declared dead.)

"I think PUE levels have already stagnated," Omdia's Galabov told Data Center Knowledge, "and I am not seeing the broad action needed to change them."

Uptime Intelligence Research Director Daniel Bizo told Data Center Knowledge that about one facility in five employs some degree of liquid cooling in its racks, with a majority expecting to have adopted DLC within five years. Whether most of those DLC-equipped racks will be officially categorized as air-cooled, however, depends on factors we may not have anticipated yet. While that's being sorted out, liquid cooling remains the predominant choice of fewer than 10% of operators Uptime has surveyed.

"On balance, Uptime sees continued, gradual uptake of DLC through market cycles in the coming years," Bizo said. "The strongest drivers are the proliferation of workloads with dense computing requirements, and increasingly the intertwined areas of cost optimization, energy efficiency, and sustainability credentials.

"Our data shows that very high-density racks (30-40 kW and above) are indeed becoming more common, but remain the exception. AI-development and AI-powered workloads will likely add to the prevalence of high-density racks, in addition to existing installations for technical computing such as engineering simulations, large optimization problems, and big data analytics."

Omdia's Sukumaran warns that AI may yet drive density trends in the opposite direction, especially if more facilities' AI subsystems remain officially air-cooled. "We are also seeing the rack densities shrinking because of high power-consuming GPU- and CPU-based servers," said Sukumaran. "So it could be a tailwind for more space and power capacity."

Level Off

Last month, HPE and Intel announced their joint participation in a chassis and rack standard they claim will extend the serviceable lifespan of air-cooled facilities. HPE's Cray XD220v server unit is about an inch-and-a-third wider than its ProLiant XD220n, enabling its 4th-gen Intel processors to be equipped with wider heat sinks — or wider cold plates. Addressing the fears of operators still worried about coping with dielectric fluids every day, the manufacturers advanced the claim that XD220v's performance increased only marginally (1.8% on average) when optional DLC cooling replaced conventional air cooling.

Of course, DLC has never been about improving processor performance — just the cost of it. A simple click on HPE's charts reveals DLC did indeed improve XD220v's performance-per-watt by almost 20% on industry-standard SPECrate, SPEChpc, and Linpack test batteries.
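Those two figures together hint at how much power DLC may have saved. The following is a rough Python sketch, assuming performance-per-watt is simply performance divided by power draw, and assuming (which HPE's charts may not support) that the 1.8% average gain and the roughly 20% gain describe the same workloads. The function name is hypothetical, and "almost 20%" is rounded up to 0.20 here.

```python
def implied_power_ratio(perf_gain: float, perf_per_watt_gain: float) -> float:
    """Ratio of new power draw to old power draw implied by the two gains.

    Since perf_per_watt = perf / power, it follows that
    power_new / power_old = (1 + perf_gain) / (1 + perf_per_watt_gain).
    """
    return (1.0 + perf_gain) / (1.0 + perf_per_watt_gain)


# Figures quoted in the article; treated here as approximate inputs.
ratio = implied_power_ratio(perf_gain=0.018, perf_per_watt_gain=0.20)
power_reduction_pct = (1.0 - ratio) * 100.0

print(f"Implied power draw relative to air cooling: {ratio:.3f}")
print(f"Implied power reduction: {power_reduction_pct:.1f}%")
```

Under those assumptions, the implied reduction in power draw is about 15%. That is a back-of-the-envelope estimate rather than a figure HPE or Intel reported.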

Yet it's obvious what message the manufacturers' marketing teams chose to highlight here: It won't be all that bad for you if you choose to put off your liquid cooling investments for another five years.

Meanwhile, thermal equipment maker JetCool Technologies has been touting its latest DLC technology. Its SmartPlate and SmartLid systems consist of cold plates that deliver chilled fluid directly to the surface of processors, through what JetCool describes as microconvective channels.

Citing Uptime data showing infrastructure improvement costs to be the primary deterrent in operators deciding to adopt all-out liquid cooling, a recent JetCool whitepaper co-produced with Dell argues that data centers have enough to worry about without introducing flooding to their list of worries.

"With a self-contained unit, the need for extensive infrastructure upgrades is eliminated," JetCool states. "The absence of complex plumbing and piping systems also minimizes the risk of leaks or system failures, potentially reducing repair and replacement costs, and containing potential leaks at the server-level, not rack- or facility-level."

It's a marketing message that appears to be tailored not only to its customers' immediate needs, but also to their reluctance to acknowledge they have such needs. Try DLC out, the call to action goes. It'll probably help. And if it doesn't, what could it hurt? It's better than retrofitting all your facilities with fluid-flowing pipes if you don't have to yet.

"A wholesale change to DLC is fraught with technical and business friction," Uptime's Bizo told Data Center Knowledge, "largely due to lack of standards and a disjointed organizational interface between facilities management and IT teams. Air cooling meets operators' performance, risk, and cost expectations (even if it wastes power) for most use cases — and this includes the latest power-hungry chips from Intel, AMD, and Nvidia."

What's more, Bizo went on, the technical utilization levels of most data centers today remain low. There's plenty of room for more IT — which is why investments in AI-ready servers are rising, amid the overall decline in server-related spending. So there's no urgent need to drive up server density. As a result, Uptime perceives, "market adoption of DLC will not be evenly spread, and demand signals will be mixed."

"Ambitious projections of technology change in data centers," Bizo noted, "have a tendency to disappoint."

Editor's Note: Both Omdia and Data Center Knowledge are part of Informa Tech.