In February 2019 IBM launched the IBM Research AI Hardware Center in Albany, New York, meant to become the “nucleus” of a new, global ecosystem of research and commercial organizations collaborating to design computer chips optimized for AI.

On Sunday evening, Pacific time, to coincide with the kickoff of the Hot Chips conference, IBM unveiled the first piece of hardware to come out of the center: an on-chip AI accelerator for IBM mainframes. It promises to let large mainframe users run AI inference on the same system that is processing tens of thousands of transactions per second.

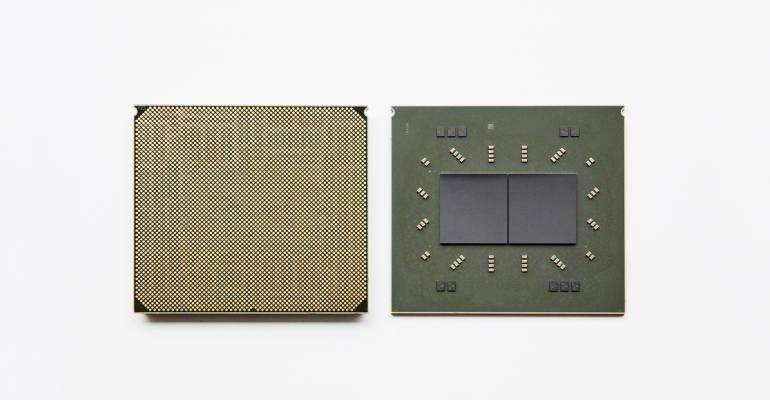

The accelerator is part of IBM's new Telum chip for its Z and LinuxONE mainframes, which is expected to hit the market in the first half of 2022. Telum also has an entirely new cache design that improves performance by 40 percent, but the on-chip AI accelerator is its biggest new feature, said Christian Jacobi, an IBM distinguished engineer and chief architect of the Z processor design.

“With every generation, we come up with ways to improve scalability and performance, but then there are important features we add to each chip to not only drive speeds and feeds forward,” Jacobi told DCK in an interview.

The previous Z14 chip added encryption capabilities, and the current Z15 chip added compression. “So there’s things like security, availability, and resiliency that we are improving with every generation. This generation we’ve invested in an on-chip AI accelerator, and it will enable our clients to embed AI inference tasks,” Jacobi said.

IBM Telum Performs AI Inference as It Processes Transactions

That means companies can embed deep learning models into their transactional workloads and not only gain insights from the data during the transaction, but also leverage those insights to influence the outcome of the transaction, he said. For example, banks with mainframes running Telum will be able to detect and prevent credit card fraud.

“You can abort the transaction and reject it rather than deal with it after the fact,” Jacobi said. “You can imagine the business value that comes from that use case.”

The IBM Telum chip is meant for customers who want to use AI to gain business insight at scale across their banking, finance, trading, and insurance applications and customer interactions, the company said. Besides fraud detection, it can accelerate loan processing, the clearing and settlement of trades, anti-money laundering measures, and risk analysis.

The addition of AI acceleration in Telum is “very important and is perhaps the most significant enhancement to the Z processor family that I can remember,” said Karl Freund, founder and principal analyst of Cambrian AI Research.

Customers can do some machine learning on existing Z chips today, but not the kind of deep learning inference that Telum enables, he told DCK. Today, mainframe customers who want to use deep neural networks must offload the data and compute tasks to separate x86 or IBM Power Systems servers, he said.

“Some companies are doing this, but it’s expensive, takes a lot of engineering work to set up, and the result is a fairly high-latency solution. You have to wait a long time to get the answer back because it’s a separate system across the network,” Freund said. “By having an AI accelerator on the Telum chip, you can do all that in a blink of an eye with no additional cost, very low latency, and no additional security risk.”

According to IBM, offloading AI tasks to separate systems doesn’t work for complex mid-transaction fraud detection because of the added latency; such systems catch fraud only after it has occurred. Telum enables real-time fraud detection and prevention, the company said.

Nanosecond-Range Speeds Via Direct Link to Cache in IBM Telum

IBM Telum has eight processor cores and a completely redesigned cache and chip-interconnection infrastructure, providing 32MB of cache per core and allowing systems to scale to 32 Telum chips, the company said.

“We deliver 40 percent more capacity per socket on the Telum compared to the Z15,” Jacobi said.

To enable fast AI processing during a transaction, the AI accelerator in Telum is connected directly to the cache, so it can access data as quickly as the processor cores can: within nanoseconds. “It’s literally the nanosecond range for accessing the data as it flows through the transaction,” he said.

It has to be that fast because mainframe customers run tens of thousands of transactions every second, and those transactions typically have service-level agreements that require responses within a few milliseconds, he said.

“As they take the journey, they recognize how important it is to do those things directly on IBM Z,” Jacobi said. “They don’t have to deal with latency or have to deal with another platform having access to sensitive data. That is a huge advantage in the journey to AI.”