Dave Montgomery is Director, Product Marketing, Data Center Solutions, at Western Digital Corporation.

The "general-purpose" data center architectures that have served so well in the past are reaching the limits of their scalability, performance and efficiency. They use a uniform ratio of resources to address all compute processing, storage and network bandwidth requirements. This "one size fits all" approach is no longer effective for data-intensive workloads (e.g., big data, fast data, analytics, artificial intelligence and machine learning). What is required are capabilities that give more control over the blend of resources each workload needs, so that processing, storage and network bandwidth can each be scaled independently of one another. The end objective is a flexible and composable infrastructure.

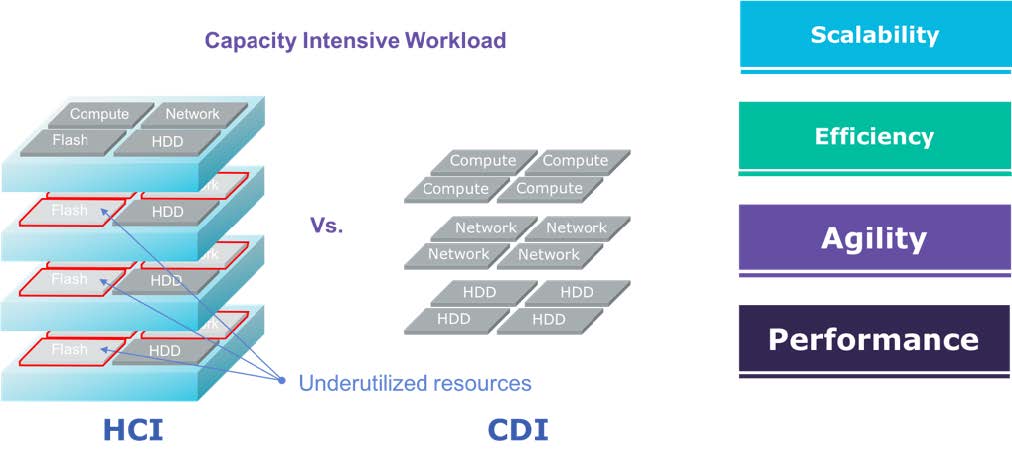

Figure 1: Today’s data-centric architectures

Though hyper-converged infrastructures (HCIs) combine compute, storage and network resources into a single virtualized system (Figure 1), adding more storage, memory or networking also requires adding more processors. This creates a fixed building-block approach (each block containing CPU, DRAM and storage) that cannot achieve the level of flexibility and predictable performance needed in today's data centers. As a result, composable disaggregated infrastructures (CDIs) are becoming a popular solution: rather than simply converging or hyper-converging IT resources into a single integrated unit, they optimize those resources to improve business agility.

The Need for Composable-Disaggregated Infrastructures

Given the challenges associated with general-purpose architectures (fixed resource ratios, underutilization and overprovisioning), converged infrastructures (CIs) emerged, delivering preconfigured hardware resources in a single system. The compute, storage and networking components are discrete and managed through software. CIs have since evolved into HCIs, in which all of the hardware resources are virtualized, delivering software-defined compute, storage and networking.

Though HCIs combine compute, storage and network resources into a single virtualized system, they are not without inefficiencies. For example, scalability limits are defined by the processor, and access to resources is made through the processor. To add more resources, such as storage, memory or networking, HCI architectures add more processors even when those processors are not required. The result is that data center architects are trying to build flexible infrastructures out of inflexible building blocks.
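To make the building-block problem concrete, the sketch below compares what each model provisions for the same demand. The node size (2 CPU sockets and 8 TB per HCI node), the workload (4 sockets, 80 TB) and the function names are hypothetical, chosen only for illustration; they are not survey data.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Demand:
    cpus: int          # CPU sockets the workload actually needs
    storage_tb: float  # storage capacity the workload actually needs


@dataclass(frozen=True)
class HciNode:
    cpus: int          # CPU sockets in one fixed HCI building block
    storage_tb: float  # storage bundled into that same block


def hci_provision(demand: Demand, node: HciNode) -> dict:
    """Fixed building blocks: buy whole nodes until every resource requirement is covered."""
    nodes = max(math.ceil(demand.cpus / node.cpus),
                math.ceil(demand.storage_tb / node.storage_tb))
    return {
        "nodes": nodes,
        "idle_cpus": nodes * node.cpus - demand.cpus,
        "idle_storage_tb": nodes * node.storage_tb - demand.storage_tb,
    }


def composable_provision(demand: Demand) -> dict:
    """Disaggregated pools: each resource is allocated independently, so nothing is stranded."""
    return {"cpus": demand.cpus, "storage_tb": demand.storage_tb,
            "idle_cpus": 0, "idle_storage_tb": 0.0}


# Hypothetical numbers for illustration only.
demand = Demand(cpus=4, storage_tb=80.0)
node = HciNode(cpus=2, storage_tb=8.0)
print(hci_provision(demand, node))
print(composable_provision(demand))
```

With these assumed numbers, the storage requirement forces ten HCI nodes and strands 16 CPU sockets, whereas composable pools allocate the 4 sockets and 80 TB independently.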

A recent survey of more than 300 mid-sized and large enterprise IT users found that only 45 percent of the total available storage capacity in an enterprise data center infrastructure is provisioned, and only 45 percent of compute hours and storage capacity is utilized. The fixed building-block approach leads to underutilization and cannot achieve the level of flexibility and predictable performance needed in today's data centers. The HCI model needs to be disaggregated and made easily composable, and software tools based on an open application programming interface (API) are the way forward.

Introducing Composable Disaggregated Infrastructures

A composable disaggregated infrastructure is a data center architectural framework whose physical compute, storage and network fabric resources are treated as services. The high-density compute, storage and network racks use software to create a virtual application environment that provides whatever resources the application needs, in real time, to achieve the performance required to meet workload demands. It is an emerging data center segment with a forecast total-market compound annual growth rate (CAGR) of 58.2 percent from 2017 to 2022.
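As a quick check on what that forecast implies: a CAGR compounds annually, so the total growth over n years is (1 + CAGR)^n. The snippet below applies that formula, assuming the 58.2 percent rate holds for each of the five years from 2017 to 2022. It is arithmetic on the quoted rate, not an independent market estimate.

```python
def growth_multiplier(cagr: float, years: int) -> float:
    """Total growth factor implied by a constant compound annual growth rate."""
    return (1.0 + cagr) ** years


# 2017 -> 2022 covers five annual compounding periods.
multiplier = growth_multiplier(0.582, 2022 - 2017)
print(f"{multiplier:.1f}x")  # prints "9.9x"
```

Under that assumption, the segment would end the period at roughly 9.9 times its 2017 size.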

Figure 2: Hyper-Converged vs. Composable

In this infrastructure (Figure 2), virtual servers are created out of independent resource pools composed of compute, storage and network devices, rather than from partitioned resources hardwired into HCI servers. Because the software and hardware components are tightly integrated, these servers can be provisioned and re-provisioned as needed, under software control, to suit the demands of particular workloads. With an API on the composing software, an application could request whatever resources it needs and have the server reconfigured on the fly, in real time and without human intervention: a step toward the self-managing data center.
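The API-driven provisioning described above can be sketched as a small composing service that carves logical servers out of shared pools and returns the resources when a server is released. This is a minimal illustration, not any vendor's composability API: the `Pools`, `LogicalServer` and `Composer` names, the resource units and the admission rule are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Pools:
    """Free capacity in the disaggregated resource pools (hypothetical units)."""
    cpus: int
    dram_gb: int
    nvme_tb: int


@dataclass(frozen=True)
class LogicalServer:
    name: str
    cpus: int
    dram_gb: int
    nvme_tb: int


class Composer:
    """Hypothetical composing service: builds logical servers from shared pools."""

    def __init__(self, pools: Pools) -> None:
        self.free = pools
        self.servers: dict[str, LogicalServer] = {}

    def compose(self, name: str, cpus: int, dram_gb: int, nvme_tb: int) -> LogicalServer:
        if name in self.servers:
            raise ValueError(f"server {name!r} already exists")
        if cpus > self.free.cpus or dram_gb > self.free.dram_gb or nvme_tb > self.free.nvme_tb:
            raise RuntimeError(f"insufficient free resources for {name!r}")
        # Each resource is drawn from its own pool, in whatever ratio the workload asks for.
        self.free.cpus -= cpus
        self.free.dram_gb -= dram_gb
        self.free.nvme_tb -= nvme_tb
        server = LogicalServer(name, cpus, dram_gb, nvme_tb)
        self.servers[name] = server
        return server

    def release(self, name: str) -> None:
        # Releasing a server returns its resources to the pools for re-provisioning.
        server = self.servers.pop(name)
        self.free.cpus += server.cpus
        self.free.dram_gb += server.dram_gb
        self.free.nvme_tb += server.nvme_tb


composer = Composer(Pools(cpus=64, dram_gb=1024, nvme_tb=200))
composer.compose("analytics", cpus=16, dram_gb=256, nvme_tb=40)
composer.release("analytics")
# The same hardware is re-composed with a storage-heavy ratio for a different job.
composer.compose("archive-ingest", cpus=4, dram_gb=64, nvme_tb=160)
print(composer.free)
```

The design choice that matters here is that each pool is debited separately, so a storage-heavy request does not drag unneeded processors along with it, which is the contrast with the fixed HCI building block.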


A network protocol is equally important to a CDI, because it enables compute and storage resources to be disaggregated from the server and made available to other applications. Connecting compute or storage nodes over a fabric matters because it provides multiple paths to each resource. NVMe™-over-Fabrics is emerging as the frontrunner network protocol for CDI implementations. It delivers the lowest available end-to-end latency from application to storage, and it enables CDIs to provide the data-locality benefits of direct-attached storage (low latency and high performance) while delivering agility and flexibility by sharing resources throughout the enterprise.

Non-Volatile Memory Express™ (NVMe) technology is a streamlined, high-performance, low-latency interface that uses an architecture and set of protocols developed specifically for persistent flash memory technologies. The NVMe-over-Fabrics specification extends the standard beyond locally attached server applications, allowing flash devices to communicate over a network with the same high-performance, low-latency benefits as locally attached NVMe. In addition, there is virtually no limit to the number of servers that can share NVMe-over-Fabrics storage, or to the number of storage devices that can be shared.
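The benefit of multiple fabric paths is that a host can keep reaching shared storage when one path fails. The sketch below is a simplified round-robin selector with failover; it is not the NVMe multipathing or Asymmetric Namespace Access (ANA) logic, and the `FabricPath` and `MultipathNamespace` names, path labels and addresses (taken from the 192.0.2.0/24 documentation range) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FabricPath:
    name: str             # hypothetical label for one route to the storage target
    address: str          # illustrative target address on the fabric
    healthy: bool = True


class MultipathNamespace:
    """Round-robin across healthy paths, skipping failed ones (simplified, hypothetical)."""

    def __init__(self, paths: list[FabricPath]) -> None:
        if not paths:
            raise ValueError("at least one path is required")
        self.paths = list(paths)
        self._next = 0

    def select_path(self) -> FabricPath:
        # Try each path at most once, starting after the last one used.
        for _ in range(len(self.paths)):
            path = self.paths[self._next]
            self._next = (self._next + 1) % len(self.paths)
            if path.healthy:
                return path
        raise RuntimeError("no healthy path to namespace")


path_a = FabricPath("port-A", "192.0.2.10")
path_b = FabricPath("port-B", "192.0.2.20")
ns = MultipathNamespace([path_a, path_b])
print([ns.select_path().name for _ in range(3)])  # alternates while both paths are up
path_a.healthy = False                            # simulate a link failure on port-A
print([ns.select_path().name for _ in range(2)])  # I/O continues over port-B
```

Alternating between paths spreads load while both are up; when one fails, the selector simply skips it, so the namespace stays reachable as long as any path survives.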

Final Thoughts

Data-intensive applications in the core and at the edge have exceeded the capabilities of traditional infrastructures and architectures, especially with respect to scalability, performance and efficiency. Because general-purpose infrastructures rely on a uniform ratio of resources to address all compute processing, storage and network bandwidth requirements, they are no longer effective for these diverse, data-intensive workloads. With the advent of CDIs, data center architects, cloud service providers, systems integrators, software-defined storage developers and OEMs can now deliver storage and compute services with better economics, agility, efficiency and simplicity at scale, while enabling dynamic service-level agreements (SLAs) across workloads.

Opinions expressed in the article above do not necessarily reflect the opinions of Data Center Knowledge and Informa.

Industry Perspectives is a content channel at Data Center Knowledge highlighting thought leadership in the data center arena. See our guidelines and submission process for information on participating.