An edge data center is small, lightweight, in many cases surprisingly portable, and designed to withstand climate extremes without on-site staff. It’s being marketed to enterprises as an alternative to colocation or the cloud for specialized applications that need to run close to where data is gathered or close to the end customer, such as video analysis and pre-processing for remote cameras.

But the usefulness of an edge data center will only be determined by the performance of the edge servers it contains. And what are these things, really?

There are no fewer than three schools of thought with respect to what an edge server should be. They are not alternative strategies to one another, three choices on a shelf from which you pick the best for your particular needs. Each represents a design principle, a direction in which its proponents believe edge architecture should head.

- Ruggedization. In many cases, edge data center environments are prone to greater climate extremes than the controlled, tempered, evened-out environments of enterprise facilities. Thus, the proponents of ruggedization argue that special concentrated, modular, and weather-resistant x86 servers offer the best option for reliable performance under pressure.

- Normalization. If it costs too much in the near term to populate edge facilities with premium servers and storage equipment, proponents of this principle say, then even if the long-term benefits are appreciable, you’re probably not going to do it. You might as well devote your energies to modernizing and ruggedizing your facilities, including investing in modular micro data center chassis and populating them with the same servers you know and trust in your colo facilities, which are considerably cheaper when you purchase in volume anyway.

- Amortization. Most organizations probably will not send edge infrastructure specialists into the field on a regular repair route, like telephone repairmen in the old days of Western Electric. As a result, the edge will probably be where old systems are put out to pasture. You might not want a multimillion-dollar investment in x86 servers devoted to such applications as measuring currents throughout a wind farm. Given this, amortization proponents say, go ahead and let your old embedded systems and decade-old white boxes live out their last days in the field. Concentrate instead on network connectivity, bandwidth, and having the right software platform to coordinate your islands of misfit toys into cohesive processing units that collectively produce some measure of work value.

Option 1: The ‘Edge-Appropriate’ Approach

In February, Hewlett Packard Enterprise introduced what it called a “compact and ruggedized design” that was best suited for remote and extreme locations: the Edgeline EL8000. Its form factor is described by the company as something telecommunications customers requested for implementation with 5G wireless: a miniature rack that’s 17 inches deep and 8 x 8 inches in the front (5U tall).

HPE’s EL8000 5U edge server combo. (Image by HPE)

For standard racks, two EL8000 units can be mounted side-by-side. But HPE believes that a great many edge deployments won’t have standard racks; instead they’ll be utility boxes.

“It’s fairly good density,” says Gerald Kleyn, HPE’s senior director of product management. “We have four nodes in each machine, so it can run as an independent cluster out at the edge, so you get that redundancy, resilience and hot-pluggability to make it super-easy to service when you may not have an operator out at the edge who understands which cables go where. So we bill it as an edge-appropriate system.”

Kleyn says the EL8000 has been ruggedized for operating conditions in the range of 0°C to 55°C, which is in line with ASHRAE Class A3 and Class A4 specifications for relative humidity levels between 8 percent and 90 percent. He adds that the company plans, in cooperation with its telco customers, to test the EL8000’s durability in real-world operating environments.

With the EL8000, HPE is also targeting the petroleum, manufacturing, and transportation industries, but Kleyn admits that with its current immediate need for processing power to accommodate the goals of 5G wireless, the telco market is the real jackpot.

“We see a key need inside of 5G for new levels of compute at the edge to handle the types of workloads that they’re looking at and future applications that’ll be deployed there,” he tells Data Center Knowledge.

Yet as we learned, the EL8000 is no snowflake. It’s essentially a ProLiant DL360, the enterprise workhorse of HPE’s product line, retooled and rearranged for a more compact chassis. HPE says it’s mindful that telcos won’t be sending their IT specialists into the field to administer their servers along with their base stations, so they’ll need components that are more easily handled by one person with a poncho and a screwdriver, backed up by a nationwide service fleet capable of repairing or replacing these systems as readily as they would for an enterprise facility.

Option 2: Homogeneity and Practicality

The hyperscale design ethic promoted the ideal of a server being expendable, even disposable. In a large-scale setting, it may be cheaper and more practical to replace low-performing servers rather than repair them. Perhaps all an enterprise’s edge deployments put together constitute a large-scale setting, only geographically dispersed.

In stark contrast to HPE’s position, Dell EMC espouses the notion that an edge server is simply a server that happens to be in an edge deployment.

“This is an emerging space,” says Ty Schmitt, Dell EMC’s VP of modular solutions. “I think customer usage models are just now beginning to understand what kind of performance implications there are — what performance they get from various form factors of servers, what implications there are for different form factors and different amounts of power consumption. They’re looking at that from a total cost of ownership standpoint, so where and when different form factors of servers might be required, we would have to look at that holistically.”

Dell literally wrote the book on making x86 boxes to order. It created the custom PC industry and extended that model into servers. If customers really needed an exclusive form factor at the edge, Schmitt says, they’d be asking for it. And they’re not.

“It’s got to be something that makes sense as a large-adoption solution, and it’s too soon to tell when and if that’s going to happen,” he says.

Granted, Dell had the word “edge” in its PowerEdge server brands before it was decided that “the edge” was a place. But its context at that time was only in terms of performance, and those requirements may be roughly the same regardless of the server’s location or environment.

Dell Edge Gateway 5100 series. (Image: Dell)

Dell does indeed have its own edge form factor device: the Edge Gateway series (model 5100 shown above). So it does have a horse in the race, but if indeed an edge deployment in all circumstances mandates a server that you wouldn’t install in an ordinary enterprise, then Schmitt believes the jury is still out as to what that may be.

The ways edge servers are managed and administered in the future may one day call for a new and exclusive form factor, he says. It’s not out of the realm of possibility. But even these administrative patterns have yet to settle into their own styles.

“Everyone’s trying to figure out, ‘Do I need to go to an alternative form of cooling? Do I have to use DC power or AC power?’” Schmitt says. “We view this year, and probably into early next year, as the time of POC, the proof-of-concept. There are a lot of component providers, ODMs, OEMs, real estate, an entire industry — you can draw swim lanes up and down the stack — that are trying to understand, what makes sense? What can be optimized? What different form factors, cooling, power methodologies, security — there’s a whole lot of that going on right now because frankly, people are trying to use something in a way that hasn’t been done before and bake it into the TCO model that applies to their business.”

The best way to determine what those differences should be, according to Dell, may be scientifically: by deploying the same servers in both edge and climate-controlled environments and observing the differences in their behavior and performance. Only then will customers fully comprehend what they need, over and above what they think they want.

Option 3: Pot Luck

The edge is already established — it’s not some empty shell waiting to have ProLiant or PowerEdge servers installed in it. And a great many of its processors are smaller and lower-power than what you’d find in your smartphone — for example, dual-core Intel Atom CPUs.

So rather than upgrade everything you already have in the field to x86 servers, a company called NodeWeaver advises taking an altogether different approach: Build a reliable network behind everything you currently have in the field (old and new, dual-core and multi-core), then manage all of those assets using a back-end platform that treats them all like components of a cohesive whole, like a cloud.

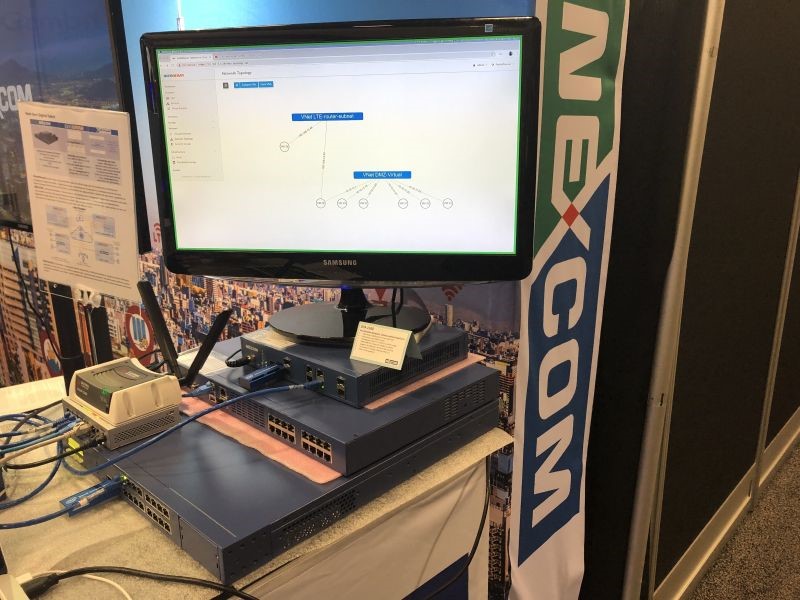

A typical NodeWeaver installation at work managing a handful of small edge-class devices. (Photo: ScaleWize)

“The bare minimum for a system that can run applications is two physical cores and 8 gigabytes of memory,” says Carlo Daffara, NodeWeaver’s co-founder and CEO. Think of NodeWeaver as a VMware vSphere-like platform that pools together the processing capabilities, limited though they may be, of up to 30 devices, enabling them to perform one set of jobs as a cluster.

“The system will turn all the storage it finds into a single sea of data,” Daffara says. “You don’t see an individual location; you see a global pool of storage and you can decide how many replicas you want — because you may lose a node, or a disk may disappear from the network — and what kind of replica. And that’s basically it.”
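The pooling Daffara describes can be illustrated with a rough Python sketch. It is not NodeWeaver's software or API: the `EdgeNode` type, the function names, and the sample fleet are all hypothetical. The only figures taken from the article are the two-core, 8 GB minimum (treated here as a per-device requirement, which is an assumption) and the 30-device ceiling. The storage estimate simply divides raw capacity by the replica count, so it should be read as an upper bound rather than a description of how any real platform places data.

```python
from dataclasses import dataclass

# Figures quoted in the article; everything else is a hypothetical illustration.
MIN_CORES = 2
MIN_MEMORY_GB = 8
MAX_CLUSTER_NODES = 30


@dataclass(frozen=True)
class EdgeNode:
    name: str
    cores: int
    memory_gb: int
    storage_gb: int


def eligible_nodes(nodes):
    """Keep nodes that meet the quoted minimum, capped at the quoted cluster size."""
    fit = [n for n in nodes if n.cores >= MIN_CORES and n.memory_gb >= MIN_MEMORY_GB]
    return fit[:MAX_CLUSTER_NODES]


def usable_storage_upper_bound_gb(nodes, replicas):
    """Upper bound on usable capacity when each block is stored `replicas` times.

    Ignores placement constraints and overhead, so real capacity may be lower.
    """
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    if replicas > len(nodes):
        raise ValueError("cannot place more replicas than there are nodes")
    raw = sum(n.storage_gb for n in nodes)
    return raw / replicas


# Hypothetical mixed fleet: two small Atom-class boxes, one undersized
# device, and one older white-box server.
fleet = [
    EdgeNode("atom-box-1", cores=2, memory_gb=8, storage_gb=500),
    EdgeNode("atom-box-2", cores=2, memory_gb=8, storage_gb=500),
    EdgeNode("old-sensor-hub", cores=1, memory_gb=2, storage_gb=64),
    EdgeNode("white-box-1", cores=8, memory_gb=32, storage_gb=1000),
]

cluster = eligible_nodes(fleet)
print([n.name for n in cluster])   # ['atom-box-1', 'atom-box-2', 'white-box-1']
print(usable_storage_upper_bound_gb(cluster, replicas=2))  # 1000.0
```

In this made-up fleet, the undersized sensor hub is left out of the pool, and keeping two copies of everything halves the 2,000 GB of raw storage. That is the trade-off behind Daffara's remark that you decide how many replicas you want because a node or a disk may disappear.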

The whole value proposition for edge computing is based around minimizing latency by leveraging processing power as close to the point of data receipt – or otherwise to the point of data delivery – as possible. NodeWeaver would at first appear to contradict that whole proposition by networking edge devices that are not near one another so they can execute the tasks that made edge computing necessary to begin with.

Daffara counters this with a compelling argument: If we consider the edge as a network in itself, then the closer we bring that network to the critical points of acquisition or delivery, the more efficient that system will become on the other side of the edge network.

“We delivered a system for controlling energy production in a wind farm in Egypt,” the CEO told us. “The ability to use small boxes that can be bolted directly onto the main production site and then require only an internet cable with power-over-Ethernet to light up the device means that this kind of deployment can be done in one hour [or] two hours instead of a few days. You can buy any kind of hardware device on the market, even in commodity exchanges for industrial systems, and cabling can be done by anyone with a minimum of electrical capability.”

The same formula that OpenStack used for leveraging ordinary servers to produce a common cloud infrastructure, Daffara argues, can be put to work on the other side of the equation — leveraging very ordinary devices to produce a common edge infrastructure.

If edge computing becomes an industry unto itself, then its evolution will eventually follow one of these three paths. Just one, not all at once.