Until now, the storage and memory hierarchy in the data center has been the familiar set of DRAM for the hot tier, flash and SSD for the warm tier, and hard drive and tape for the cold tier. Persistent memory, which is arriving at scale this year in the shape of Intel’s Optane Data Center SSDs, changes that hierarchy significantly.

Think of it as bringing hot-tier capabilities to a (pricey) SSD in a DIMM format that fits into a DRAM socket, at perhaps slightly lower speeds than DRAM. It avoids the latency of sitting on the PCIe bus and can attach more than 3TB of memory to each CPU socket, and, like flash, it keeps its data when the power is turned off.

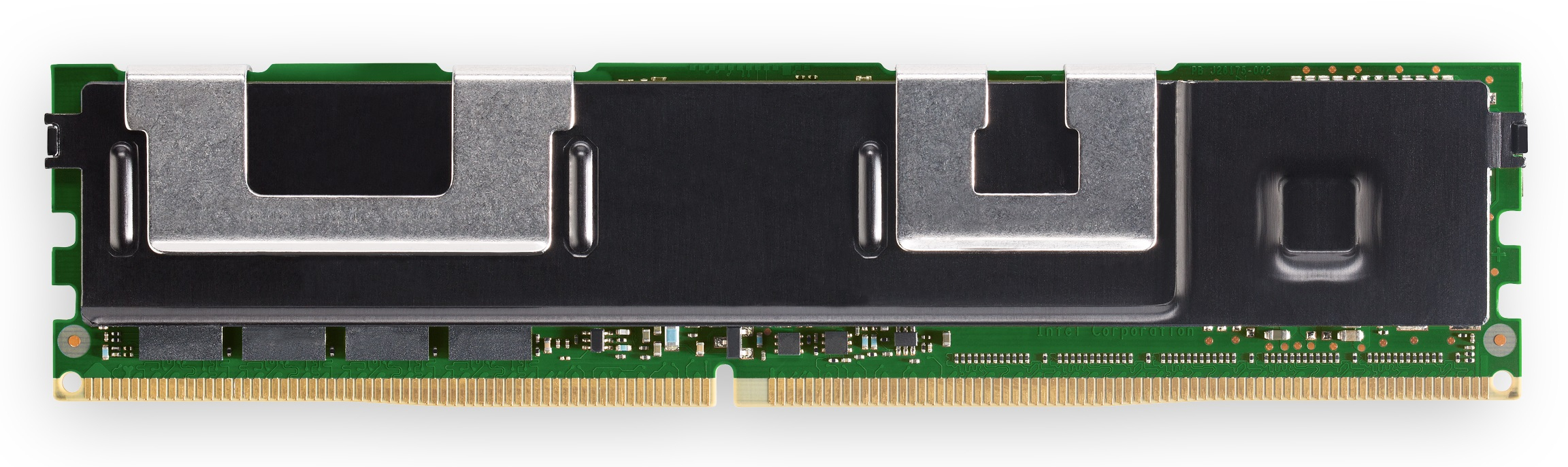

Last week Intel announced data center-class memory modules in sizes of 128GB, 256GB, and 512GB with hardware encryption for data security and advanced error correction. They’re sampling to partners now and will be available later in the year, the company said. While he wouldn’t commit to specific dates, Intel corporate VP Bill Leszinske told Data Center Knowledge at the launch event that we could expect to see real applications running on Optane memory in production in 2018. (Developers will be able to try their code out on Optane systems run by Intel in the next few weeks by applying for access through the Intel Builders Construction Zone.) He also wouldn’t disclose pricing or endurance, saying only that Intel is designing the product so it will be operational throughout its expected lifecycle.

A Fundamental Rethink

Taking proper advantage of persistent memory will take more than upgrading existing servers with the new Xeon processors that Intel says Optane must be paired with. It also means fundamentally rethinking how applications and storage systems are architected, because so many things change when memory doesn’t get erased.

Alper Ilkbahar, the general manager of Intel’s data center memory and storage solutions group, was keen to emphasize the opportunities of rearchitecting for direct access (DAX), especially for large in-memory databases that can stop depending on the file system. Oracle, Microsoft, Redis, Aerospike, SAP, RocksDB, and Cassandra databases already support persistent memory models.

Intel Optane persistent memory

“When an application tries to access memory, it makes a hardware call, and if the data is not in the cache, the memory controller makes a cache line access call to DDR RAM, which is still very low-latency: 100 nanoseconds or so,” Ilkbahar said. “You get the data back quickly, and the 64-byte cache line is a good fit to deliver small granularity of data back to the CPU – so nothing stalls [in the application].” The disadvantage is the limited capacity and the cost of implementation.

“As we’re dealing with more and more data in data-intensive applications, you have to extend beyond the limit of DRAM,” he said. “But software reaches into the storage tier through very different mechanisms. Rather than making a direct hardware call the application makes a software call to the kernel, and rather than hitting the memory it goes through a lengthy process of kernel software, which can be tens of thousands of [CPU] cycles. That grabs data from maybe a very slow media and gets it back in chunks of blocks that are 4K or so. What was nanoseconds is now three orders of magnitude longer access time.”
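To put rough numbers on that gap, here is a back-of-the-envelope sketch using the figures quoted above. The 2.5GHz clock speed and the 20,000-cycle kernel path are assumptions chosen only for illustration, not figures from Intel.

```python
# Back-of-the-envelope latency comparison; every input is approximate.
DRAM_LATENCY_NS = 100        # "100 nanoseconds or so", from the quote above
ORDERS_OF_MAGNITUDE = 3      # "three orders of magnitude longer"

storage_latency_ns = DRAM_LATENCY_NS * 10 ** ORDERS_OF_MAGNITUDE
storage_latency_us = storage_latency_ns / 1_000

# Assumption (not from the article): a 2.5 GHz core clock, used only to
# turn "tens of thousands of cycles" into wall-clock time.
ASSUMED_CLOCK_HZ = 2.5e9
KERNEL_PATH_CYCLES = 20_000  # hypothetical value within "tens of thousands"
kernel_overhead_us = KERNEL_PATH_CYCLES / ASSUMED_CLOCK_HZ * 1e6

print(f"DRAM access:         ~{DRAM_LATENCY_NS} ns")
print(f"Storage-tier access: ~{storage_latency_us:.0f} us")
print(f"Kernel path alone:   ~{kernel_overhead_us:.0f} us")
```

Under these assumptions, the software path alone costs several microseconds before the media is even touched, which is why removing the kernel from the access path matters as much as the speed of the media.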

With persistent memory, applications can go directly to the hardware and access storage over the DDR bus as if it were memory, rather than going through the file system. That means developers don’t have to think about accessing memory and storage over different tiers. “You have DRAM-like speeds that are sitting on a DDR bus attached to the CPU, so it’s hardware accessible and byte accessible with lower cost and persistence.” And less software involvement in access to persistent memory means less CPU usage just for storage operations.
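The programming model described here can be approximated with an ordinary memory-mapped file. The sketch below is only an illustration: a temporary file stands in for a file on a DAX-mounted file system, the file name and region size are made up, and production code would more likely use Intel's persistent memory development kit from C or C++.

```python
import mmap
import os
import tempfile

REGION_SIZE = 4096  # one page; hypothetical size for the demo

# Assumption: an ordinary temp file stands in for a file on a DAX-mounted
# file system. On real persistent memory the mapping would reach the media
# directly; here the OS page cache sits in between.
workdir = tempfile.mkdtemp()
path = os.path.join(workdir, "pmem_demo.bin")
with open(path, "wb") as f:
    f.truncate(REGION_SIZE)

# Writer: map the file and update individual bytes with plain stores.
with open(path, "r+b") as f:
    with mmap.mmap(f.fileno(), REGION_SIZE) as region:
        region[0:5] = b"hello"  # byte-granular write, no read()/write() calls
        region[100] = 0x2A      # single-byte store at an arbitrary offset
        region.flush()          # ask the OS to write the update back to the file

# "Restart": a fresh mapping sees the data without any load step.
with open(path, "r+b") as f:
    with mmap.mmap(f.fileno(), REGION_SIZE) as region:
        recovered = bytes(region[0:5])
        marker = region[100]

print(recovered, marker)

# Clean up the demo file and directory.
os.remove(path)
os.rmdir(workdir)
```

The point of the sketch is the shape of the code: data is addressed by byte offset rather than fetched in blocks, and the second mapping finds it already in place.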

Intel demonstrated restarting Aerospike’s in-memory database (which typically needs as much RAM as a quarter of its flash capacity for indexes) on a server with the new Optane persistent memory. The restart took only 16.9 seconds, compared to 35 minutes on a server with DRAM and flash.

Those kinds of restart speeds would dramatically speed up patching and updating the OS and the applications. “We have customers who tell us, ‘We can’t afford to take systems down for maintenance, so we live on the old software’,” Ilkbahar noted.

The new architecture also improves availability – the faster startup and higher throughput could change a three-nines availability to five nines – and increases the number of users that can be supported on the system. As you scale out systems, the limits will change from running out of memory to being CPU-bound.
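A quick calculation shows why restart time and availability targets are linked. The sketch below converts "nines" into a yearly downtime budget and checks how many restarts of each length from the Aerospike demo would fit. It assumes restarts are the only source of downtime, which is a simplification, and it does not attempt to verify Intel's availability claim.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; ignores leap years


def downtime_budget_minutes(nines: int) -> float:
    """Allowed downtime per year for an availability of N nines."""
    unavailability = 10 ** -nines
    return MINUTES_PER_YEAR * unavailability


SLOW_RESTART_MIN = 35.0       # DRAM-plus-flash restart from the demo
FAST_RESTART_MIN = 16.9 / 60  # persistent-memory restart from the demo

three_nines = downtime_budget_minutes(3)
five_nines = downtime_budget_minutes(5)
speedup = SLOW_RESTART_MIN / FAST_RESTART_MIN

# Whole restarts that fit inside the five-nines budget.
slow_fits = int(five_nines // SLOW_RESTART_MIN)
fast_fits = int(five_nines // FAST_RESTART_MIN)

print(f"three nines: {three_nines:.1f} min/year")
print(f"five nines:  {five_nines:.2f} min/year")
print(f"restart speedup: {speedup:.0f}x")
print(f"35-minute restarts within five nines: {slow_fits}")
print(f"16.9-second restarts within five nines: {fast_fits}")
```

A single 35-minute restart is several times larger than the whole five-nines budget of a little over five minutes a year, while a 16.9-second restart can happen more than a dozen times within it.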

Unlike flash, where you have to erase before you can write (and often have to wait for write transactions to complete), the 3D XPoint technology inside Optane is more symmetrical on reads and writes, performing in-place writes without needing to erase first. That means the latency doesn’t degrade under load either.

Server operating systems like Windows Server, Ubuntu, and Red Hat Linux already support persistent memory, as do hyperconverged systems like Cisco Hyperflex and Microsoft’s Storage Spaces Direct. If you want to use it in a storage fabric, persistent memory already works over RDMA, but as the standard wasn’t designed for it, a new persistent memory over fabric (PMOF) standard is in development. This will bypass the usual CPU caches (there’s no need to flush the CPU caches to ensure the data persists), but applications will need to be rewritten to use the persistent memory development kit to support persistent memory replication.

Applications and administrators will also need new ways of flushing memory when simply turning the system off and on no longer does that. “If you have a memory leak and you have persistent memory, you have a persistent memory leak,” Intel software architect Andy Rudoff admitted to us. Apps may also look as if they’re still running, when what you’re actually seeing is the persistent memory. The fast way to flush memory is to throw away the encryption key, but that would cause problems for applications with shared memory or multi-tenant systems; the eventual solution may look more like the TRIM function used in SSDs. In general, he said, “there’s a tremendous amount of work that needs to happen here.”

RAM Bottlenecks in Collider Data

That remaining work might hold up adoption, Alberto Pace, head of data management at CERN, told us. The European research organisation that operates the Large Hadron Collider is looking at Optane to handle the 1GB-per-second stream of data it collects from systems like the LHC and makes available to researchers around the world. “For performance, we went into in-RAM databases, where we hit the limits of how much RAM we can have – and it takes hours to reboot the machines.”

Optane would help there, but CERN also develops and distributes open source software and manages worldwide collaboration to analyze its data. “We use a well-established traditional architecture,” Pace noted. “We really see the need for this major leap forward, but [we are investigating] to see if we are able to use the existing drivers to run the existing applications unchanged, which would allow a very fast deployment, or if the applications must be redesigned or re-architected, and this will clearly take more time.”

On the other hand, Yiftach Shoolman, the CTO of Redis Labs, told us, “We’ve got multiple requests from customers to switch [to persistent memory] almost immediately.” And Aerospike CTO Brian Bulkowski suggested that the real advantage could be provisioning-time improvements for scale-out Database-as-a-Service offerings. “Just about every database vendor is thinking about or has implemented DBaaS. When I want to just click on a button and get half a terabyte in my favorite public cloud, I care about what price it is and how fast will it be provisioned.” With persistent memory like Optane, he expects the speeds to go up and the prices to go down.