Cameron Brett is Director, Solutions Marketing, for QLogic.

Today’s mission-critical database applications, such as Oracle Real Application Clusters (RAC), run Online Transaction Processing (OLTP) and Online Analytical Processing (OLAP) workloads that demand the highest levels of performance from servers and their associated shared SAN storage infrastructure. The introduction of multi-core CPUs coupled with virtualization technologies provides the compute resources required to meet these demanding workload requirements within Oracle RAC servers, but it also increases the demand for high-performance, low-latency, scalable I/O connectivity between servers and shared SAN storage. Flash-based storage acceleration is a high-performance, scalable technology that meets this ever-growing requirement for I/O.

Flash-based storage acceleration is implemented in one of two fundamentally different ways: first, with flash as the end-point storage capacity device in place of spinning disk; second, with flash as an intermediate caching device used alongside existing spinning disk, which continues to provide the capacity. Solutions that use flash for storage capacity are now widely available. These solutions, which include flash-based storage arrays and server-based SSDs, do address the business-critical performance gap, but they use expensive flash for capacity within the storage infrastructure and require a redesign of the storage architecture.

A new class of cache-based storage acceleration via server-based flash integration, known as a caching SAN adapter, is the latest innovation in the market. Caching SAN adapters use flash-based technology to address the business-critical performance requirement while integrating seamlessly with the existing spinning disk storage infrastructure. They leverage the capacity, availability and mission-critical storage management functions for which enterprise SANs have historically been deployed.

Adding large caches directly into servers with high I/O requirements places frequently accessed data closest to the application, “short stopping” a large percentage of the I/O demand at the network edge, where it is insensitive to congestion in the storage infrastructure. This reduces the demand on storage networks and arrays, improves storage performance for all applications (even those that do not have caching enabled), and extends the useful life of existing storage infrastructure. Server-based caching requires no upgrades to storage arrays and no additional appliance installation on the data path of critical networks, and storage I/O performance can scale smoothly with increasing application workload demands.
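As a rough illustration of the offload effect described above, the following sketch estimates how much I/O still reaches the shared array for a given cache hit ratio. The function name and the example figures (50,000 IOPS, 80% hit ratio) are assumptions chosen for illustration, not measurements from the testing, and the model treats every hit as fully served at the server.

```python
def array_iops_after_caching(total_iops: float, hit_ratio: float) -> float:
    """Return the I/O rate that still reaches the shared array.

    Simplifying assumption: every cache hit is served entirely at the
    server, and every miss results in exactly one array I/O.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return total_iops * (1.0 - hit_ratio)


if __name__ == "__main__":
    # Hypothetical workload: 50,000 IOPS with an 80% cache hit ratio.
    remaining = array_iops_after_caching(50_000, 0.8)
    print(f"I/O still reaching the array: {remaining:.0f} IOPS")
```

Under this simple model, the array load freed up by one cached server becomes headroom for every other server sharing the same SAN, which is why applications without caching enabled can also benefit.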

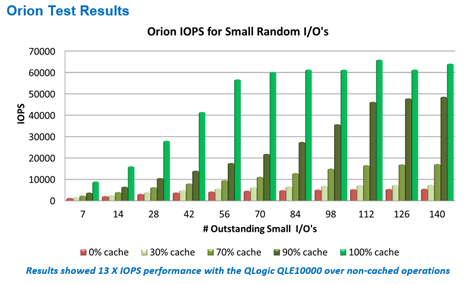

To verify the effectiveness of a caching SAN adapter, Oracle’s Orion workload tool was used to mimic and stress a storage array in the same manner as applications designed with an Oracle back-end database. In the test, the caching SAN adapter delivered up to a 13x IOPS improvement over non-cached operations, demonstrating the performance scalability needed to support the unique requirements of virtualized and clustered environments such as Oracle RAC.

Orion Test Results

To support solutions spanning multiple physical servers (including clustered environments such as Oracle RAC), caching technology requires coherence between caches. Traditional implementations of server-based flash caching do not support this capability, because the caches are “captive” to their individual servers and do not communicate with each other. While they are very effective at improving the performance of individual servers, they cannot provide storage acceleration across clustered server environments or virtualized infrastructures that span multiple physical servers. This limits the performance benefits to a relatively small set of single-server applications.

Addressing the Drawbacks

Caching SAN adapters take a new approach to avoiding the drawbacks of traditional caching solutions. Rather than creating a discrete captive cache for each server, the flash-based cache is integrated with a SAN HBA featuring a cache-coherent implementation that uses the existing SAN infrastructure to create a shared cache resource distributed over multiple servers. This eliminates the single-server limitation for caching and opens caching performance benefits to the high I/O demand of clustered applications and highly virtualized environments.

This new technology incorporates a new class of host-based, intelligent I/O optimization engines that provide integrated storage network connectivity, a Flash interface, and the embedded processing required to make all flash management and caching tasks entirely transparent to the host. All "heavy lifting" is performed transparently onboard the caching HBA by the embedded multi-core processor. The only host-resident software required for operation is a standard host operating system device driver. In fact, the device appears to the host as a standard SAN HBA and uses a common HBA driver and protocol stack that is the same as the one used by the traditional HBAs that already make up the existing SAN infrastructure.

Finally, the new approach guarantees cache coherence and precludes potential cache corruption by establishing a single cache owner for each configured LUN. Only one caching HBA in the accelerator cluster is ever actively caching each LUN’s traffic. All other members of the accelerator cluster process all I/O requests for each LUN through that LUN’s cache owner, so all storage accelerator cluster members work off the same copy of data. Cache coherence is guaranteed without the complexity and overhead of coordinating multiple copies of the same data.
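The single-owner rule described above can be sketched as a toy model. This is not QLogic's implementation: the class, method and adapter names are hypothetical, the backing array is a plain dictionary, and the write-through behavior is an assumption made to keep the example short.

```python
class AcceleratorCluster:
    """Toy model of single-owner LUN caching (all names are hypothetical)."""

    def __init__(self, adapters, backend):
        self.backend = backend                   # (lun, block) -> data; stands in for the SAN array
        self.caches = {a: {} for a in adapters}  # one flash cache per caching HBA
        self.owner = {}                          # lun -> the one adapter allowed to cache it
        self.backend_reads = 0                   # counts reads that reached the array

    def _check_member(self, adapter):
        if adapter not in self.caches:
            raise KeyError(f"{adapter} is not a cluster member")

    def assign_owner(self, lun, adapter):
        self._check_member(adapter)
        self.owner[lun] = adapter

    def read(self, via_adapter, lun, block):
        """Any member may issue the read, but only the owner's cache is used."""
        self._check_member(via_adapter)
        cache = self.caches[self.owner[lun]]
        key = (lun, block)
        if key not in cache:                     # miss: fetch once from the array
            cache[key] = self.backend[key]
            self.backend_reads += 1
        return cache[key]

    def write(self, via_adapter, lun, block, data):
        """Writes are routed through the owner (write-through is an assumption)."""
        self._check_member(via_adapter)
        self.caches[self.owner[lun]][(lun, block)] = data
        self.backend[(lun, block)] = data


if __name__ == "__main__":
    cluster = AcceleratorCluster(["hba-a", "hba-b"], {("lun0", 0): b"old"})
    cluster.assign_owner("lun0", "hba-a")
    cluster.write("hba-b", "lun0", 0, b"new")   # issued on hba-b, cached on hba-a
    print(cluster.read("hba-a", "lun0", 0))
```

Because only the owner ever holds cached blocks for a LUN, there is never a second copy to invalidate, which is the property the article relies on for coherence.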

By clustering caches and enforcing cache coherence through a single LUN cache owner, this implementation of server-based caching addresses all of the concerns of traditional server-based caching and makes the caching SAN adapter the right choice for implementing flash-based storage acceleration in Oracle RAC environments.

Notes:

Details on Orion – Oracle database application performance test: Orion is an I/O metrics testing tool which has been specifically designed to simulate workloads using the same Oracle software stack as the Oracle database application.

The following types of workloads are supported currently:

- Small Random I/O: Best suited to testing storage for an OLTP database. Orion generates a random I/O workload with a known percentage of reads versus writes, a given I/O size, and a given number of outstanding I/Os.

- Large Sequential Reads: Typical of DSS (decision support system) or data warehousing applications. Bulk copy, data load, backup and restore are typical activities in this category.

- Large Random I/O: Sequential streams access disks concurrently, and with disk striping (that is, RAID) each sequential stream is spread across multiple disks. At the disk level, multiple streams may therefore appear as random I/Os.

- Mixed workloads: A combination of small random I/O with large sequential I/O, or even large random I/O. This lets you simulate, for example, an OLTP workload of fixed-size random reads and writes together with a backup workload of 512KB sequential streams.

Test result: At 100% cache coverage, the QLogic solution exhibited an average 11x IOPS improvement over the range of the testing, with a maximum of 13x.
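To make the small random I/O workload described in the notes above more concrete, here is a minimal sketch that generates such a request pattern. It is not Orion and issues no real I/O. The function name, parameters and example sizes are assumptions for illustration, and the number of outstanding I/Os is not modeled.

```python
import random


def small_random_io(count, read_pct, io_size, device_size, seed=0):
    """Generate (operation, byte_offset, length) tuples for a random I/O pattern.

    read_pct is the percentage of requests that are reads (0 to 100).
    Offsets are aligned to io_size and spread uniformly over the device.
    """
    if not 0 <= read_pct <= 100:
        raise ValueError("read_pct must be between 0 and 100")
    blocks = device_size // io_size
    if blocks < 1:
        raise ValueError("device_size must be at least io_size")

    rng = random.Random(seed)  # seeded so the pattern is repeatable
    requests = []
    for _ in range(count):
        op = "read" if rng.random() * 100 < read_pct else "write"
        offset = rng.randrange(blocks) * io_size
        requests.append((op, offset, io_size))
    return requests


if __name__ == "__main__":
    # Hypothetical example: 8KB requests, 70% reads, over a 1GB region.
    pattern = small_random_io(count=10, read_pct=70, io_size=8192,
                              device_size=1 << 30)
    for op, offset, length in pattern:
        print(op, offset, length)
```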

Industry Perspectives is a content channel at Data Center Knowledge highlighting thought leadership in the data center arena. See our guidelines and submission process for information on participating. View previously published Industry Perspectives in our Knowledge Library.